(Firstly, this is information relates only to OLAP 10gR2. There are a number of changes within OLAP11gR1 and the following scenario has not been tested with 11g)

When you venture into most supermarkets today, somewhere on one of the many isles there will be a BOGOF – buy one get one free. Well how about an OLAP offer: “IOGALFF” – install one get at least fourteen free. I admit it is not quite as catchy as the original, but the benefits are huge and it has the result of making life so much easier.One of the more interesting challenges of using Analytic Workspace Manager (and also OWB) relates to managing all the calculated measures within a cube. Every measure should really be accompanied by a standard set of calculated measures such as:

- Current Period

- Last Year

- Last Year %

- Prior Period

- Prior Period %

- Year to Date

- Year to Date Last Year

- Year to Date Last Year %

- Quarter to Date

- Quarter to Date Last Year

- Quarter to Date Last Year %

- 3-Month Moving Average

- 6-Month Moving Average

- 12-Month Moving Average

- Dimension “A” Share of All Members

- Dimension “A” Share of Parent

Its is the addition of these types of measures that adds real value to the your BI application. As I have stated many times before: business users are not interested in looking at data from base measures, typically they are more interested in trends based on the prior period or prior year, share of revenue, moving averages and so on. This adds a lot of work for the cube designer as they need to add at least 14 calculated measures for each base measure as well as two additional calculated share measures for each dimension associated with each cube.

Which adds up to a lot of measures and manually creating all these measure is likely to take a considerable amount of time. If we take the command schema sample that is shipped with BI10g, this contains 5 base measures (costs, quantity, revenue, margin, price) with 4 dimensions (channel, geography, product, time), which translates to

5 * 14 calculated measures

+

5 * 3 (dimensions - channel, geography, product) * 2 calculated share measures

= 100 calculated measures.

As I said, it is very easy to generate a lot of calculated measures.

So is there a more intelligent way of managing calculated measures? What is the quickest way to add all these additional calculations to a cube? One way is to use the Excel Accelerator to add the calculated measures to your AW. It is relatively easy to use and allows you to use normal Excel tools such as cut & paste to quickly create all the calculations you could ever need. You can download the Excel Accelerator from the OLAP OTN Home Page, or by clicking here. Look for these links:

Creating OLAP Calculations using Excel

Creating OLAP Calculations using Exce Readme

During a recent POC, we used a more interesting approach that takes the use of customer calculations to a new level. In many ways this goes back to the various techniques we used when creating Express databases for use with Sales Analyzer to make life easier for both administrators and users. The approach is quite simple – use and additional dimension to define the type of calculation you want to execute and link this to a DML program to return the required results. The list of calculated measures listed above are converted into dimension members, which provides another way to slice, dice and pivot the data. As you can see here, the dimension member that identifies the type of calculation is in the row edge with the measure in the column edge. In this way, only one calculation is required which is used across all the available measures.

What this means is: by installing one simple (well, everything is relative) calculated measure you can get upto fourteen additional calculations for free. Hence the acronym “IOGALFF” – install one get at least fourteen free.

How is all this managed? There are five basic steps to creating this type of reporting solution:

- Create a new dimension to control the types of calculations returned

- Add some additional attributes to the time dimension to help manage some of the time series arguments for specific calculations

- Create an OLAP DML program to return the data

- Create a new cube that includes the new calculation type dimension and add a calculated measure that calls the OLAP DML program to return the required data

- Create a SQL View over the cube

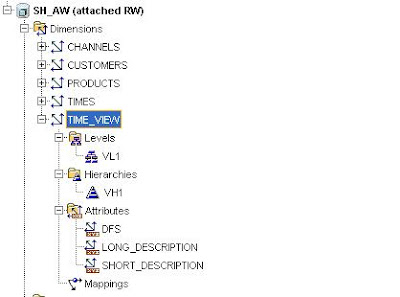

Step 1 – Creating the new Time View dimension

I have called the dimension containing the list of calculated members, Time View (mainly because the majority of the calculations are time based but may be a better name would be "measure view" ?). The Time View dimension has one level and one hierarchy with three attributes:

- Long Label

- Short Label

- Default sort order

The source data looks like this:

With the source table definition as follows:

CREATE TABLE TIME_VIEW

(TIME_VIEW_ID VARCHAR2(2 BYTE),

TIME_VIEW_DESC VARCHAR2(35 BYTE),

SORT_ORDER NUMBER(*,0));

And the view definition as follows:

CREATE OR REPLACE FORCE VIEW VW_TIME_VIEW AS

SELECT

TIME_VIEW_ID

, TIME_VIEW_DESC

, SORT_ORDER

FROM TIME_VIEW

ORDER BY SORT_ORDER;

To populate the base table the following commands are used:

INSERT INTO TIME_VIEW VALUES ('1', 'Current Period', '1')

INSERT INTO TIME_VIEW VALUES ('2', 'Last Year', '2')

INSERT INTO TIME_VIEW VALUES ('3', 'Last Year %', '3')

INSERT INTO TIME_VIEW VALUES ('4', 'Prior Period', '4')

INSERT INTO TIME_VIEW VALUES ('5', 'Prior Period %', '5')

INSERT INTO TIME_VIEW VALUES ('6', 'Year To Date', '6')

INSERT INTO TIME_VIEW VALUES ('7', 'Year To Date Last Year', '7')

INSERT INTO TIME_VIEW VALUES ('8', 'Year To Date Last Year %', '8')

INSERT INTO TIME_VIEW VALUES ('9', 'Quarter To Date', '9')

INSERT INTO TIME_VIEW VALUES ('10', 'Quarter To Date Last Year', '10')

INSERT INTO TIME_VIEW VALUES ('11', 'Quarter To Date Last Year %', '11')

INSERT INTO TIME_VIEW VALUES ('12', '3-Month Moving Average', '12')

INSERT INTO TIME_VIEW VALUES ('13', '6-Month Moving Average', '13')

INSERT INTO TIME_VIEW VALUES ('14', '12-Month Moving Average', '14')

After this, the next set of values depends on the dimensions within your data model. For my model, I have three additional dimensions that I want to analyze: Channel, Product and Customer. For each of these dimensions I want to see the % share of each member in relation to the value for all members (i.e. the top level) and the shared based on the parent value. To enable these calculations I add the following lines to the TIME_VIEW table:

INSERT INTO TIME_VIEW VALUES ('15', ' Product Share of All Products', '15')

INSERT INTO TIME_VIEW VALUES ('16', ' Product Share of Parent', '16')

INSERT INTO TIME_VIEW VALUES ('17', ' Channel Share of All Channels', '17')

INSERT INTO TIME_VIEW VALUES ('18', ' Channel Share of Parent', '18')

INSERT INTO TIME_VIEW VALUES ('19', ' Customer Share of All Customers', '19')

INSERT INTO TIME_VIEW VALUES ('20', ' Customer Share of Parent', '20')

(Obviously, these additional share calculations are not really related to time, so using the name Time View is sort of confusing and in hindsight the term Measure View would have been a better name for the dimension). Once the table has been defined, it can be mapped in AWM to create the dimension mapping (in this case I am using a view over the source

The last part of this step is to then load the members into the dimension using the dimension data load wizard or via the SQL command line using a load script. The result should look like this when you view the members using the Dimension Data Viewer:

As can be seen here, there are fourteen base calculations that can be applied to any model, plus the additional share calculations that are based on the dimensions within the source cube. Hence the title “IOGALFF” – install one get fourteen free. In reality it is more like: install one and as many calculations as you like or need, but that translates to “IOGASMAYNORL” which does not really role off the tongue.

Step 2 – Updating the Time Dimension with new Attributes.

To make the program that delivers all these calculated measures as simple as possible a number of attributes are added to the time dimension:

- Lag Prior Year

- At the Year level this equates to 1

- At the Quarter level this equates to 4

- At the Month level this equates to 12

- At the Day level it is the number of days in the year (365 or 366)

- Parent Quarter

- This is the quarter for each time period and used as the reset trigger for the cumulative totals

- Parent Year

- This is the year for each time period and used as the reset trigger for the cumulative totals

The information for the two parent attributes is taken from existing columns within the source data since this information already exists and the Lag Prior Year is a simple hard-coded value apart from the day level where the timespan value for the year is used to cope with leap years. Therefore, all the information for these three attributes should be readily available within your source data, especially if you are using the OWB Time Wizard to create your time dimension. Obviously using a view over the source table for the mapping within AWM makes adding this information relatively trivial.

Step 3 – Create the OLAP DML Calculation Program.

The new OLAP DML program, called TIME_VIEW_PRG (again feel free to change the name of program if you want), takes two arguments:

- The cube.measure_name for the base measure, in this case SALES_M1, which is the Revenue measure in the SALES cube.

- The identifier for the calculated measure within the REPORT_CUBE cube, which in this case is M1_PRG (this is explained in more detail in the next section)

DEFINE TIME_VIEW_PRG PROGRAM DECIMAL

argument T_MEASURE text " Full measure name including cube name

argument T_MEASURE_ID text " Measure name

variable T_FORM text " Name of the formula

variable T_TIME_VIEW text " Value of TIME_VIEW dimension

variable D_RETURN decimal " Return value

trap on ALLDONE noprint

T_TIME_VIEW = TIME_VIEW

T_FORM = joinchars('REPORT_CUBE_', T_MEASURE_ID)

switch T_TIME_VIEW

do

case 'VL1_1':

D_RETURN = &T_MEASURE

break

case 'VL1_2': "LY

D_RETURN = lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL)

break

case 'VL1_3': "LY%

D_RETURN = lagpct(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL)

break

case 'VL1_4': "PP

D_RETURN = lag(&T_FORM(TIME_VIEW 'VL1_1'), 1, TIMES, LEVELREL TIMES_LEVELREL)

break

case 'VL1_5': "PP%

D_RETURN = lagpct(&T_FORM(TIME_VIEW 'VL1_1'), 1, TIMES, LEVELREL TIMES_LEVELREL)

break

case 'VL1_6': "YTD

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')

else D_RETURN = cumsum(&T_FORM(TIME_VIEW 'VL1_1'), TIMES, TIMES_PARENT_YEAR)

break

case 'VL1_7': "YTD LY

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL)

else D_RETURN = cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_YEAR)

break

case 'VL1_8': "YTD LY%

D_RETURN = (&T_FORM(TIME_VIEW 'VL1_6')-cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_YEAR))/cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_YEAR)

break

case 'VL1_9': "QTD

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = NA

else if TIMES_LEVELREL EQ 'QUARTER'

then D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')

else if TIMES_LEVELREL EQ 'MONTH'

then D_RETURN = cumsum(&T_FORM(TIME_VIEW 'VL1_1'), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'DAY'

then D_RETURN = cumsum(&T_FORM(TIME_VIEW 'VL1_1'), TIMES, TIMES_PARENT_QUARTER)

else D_RETURN = NA

break

case 'VL1_10': "QTD LY

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = NA

else if TIMES_LEVELREL EQ 'QUARTER'

then D_RETURN = cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'MONTH'

then D_RETURN = cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'DAY'

then D_RETURN = cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

break

case 'VL1_11': "QTD LY%

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = NA

else if TIMES_LEVELREL EQ 'QUARTER'

then D_RETURN = (&T_FORM(TIME_VIEW 'VL1_9')-cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER))/cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'MONTH'

then D_RETURN = (&T_FORM(TIME_VIEW 'VL1_9')-cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER))/cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'DAY'

then D_RETURN = (&T_FORM(TIME_VIEW 'VL1_9')-cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER))/cumsum(lag(&T_FORM(TIME_VIEW 'VL1_1'), TIMES_LAG_PRIOR_YEAR, TIMES, LEVELREL TIMES_LEVELREL), TIMES, TIMES_PARENT_QUARTER)

break

case 'VL1_12': "3MMA

if TIMES_LEVELREL eq 'MONTH'

then D_RETURN = MOVINGAVERAGE(&T_FORM(TIME_VIEW 'VL1_1'), -2, 0, 1, TIMES, LEVELREL TIMES_LEVELREL)

else D_RETURN = NA

break

case 'VL1_13': "6MMA

if TIMES_LEVELREL eq 'MONTH'

then D_RETURN = MOVINGAVERAGE(&T_FORM(TIME_VIEW 'VL1_1'), -5, 0, 1, TIMES, LEVELREL TIMES_LEVELREL)

else D_RETURN = NA

break

case 'VL1_14': "12MMA

if TIMES_LEVELREL eq 'MONTH'

then D_RETURN = MOVINGAVERAGE(&T_FORM(TIME_VIEW 'VL1_1'), -11, 0, 1, TIMES, LEVELREL TIMES_LEVELREL)

else D_RETURN = NA

break

case 'VL1_15': "Product Share of All Products

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', PRODUCTS limit(PRODUCTS to TOPANCESTORS using PRODUCTS_PARENTREL)

break

case 'VL1_16': "Product Share of Parent

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', PRODUCTS PRODUCTS_PARENTREL)

break

case 'VL1_17': "Customers Share of All Customers

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CUSTOMERS limit(CUSTOMERS to TOPANCESTORS using CUSTOMERS_PARENTREL)

break

case 'VL1_18': "Customers Share of Parent

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CUSTOMERS CUSTOMERS_PARENTREL)

break

case 'VL1_19': "Channels Share of All Channels

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CHANNELS limit(CHANNELS to TOPANCESTORS using CHANNELS_PARENTREL)

break

case 'VL1_20': "Channels Share of Parent

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CHANNELS CHANNELS_PARENTREL)

break

doend

ALLDONE:

return D_RETURN

END

In simple terms, the program checks to see which member of the dimension TIME_VIEW is being requested and then executes the correct OLAP function to return the required data. At the moment this has only been tested on a Julian calendar hierarchy but I am doing some more testing over the next few weeks for other types of hierarchies. Some of the measures are dependant on the level within the Time dimension, so you may need to change references to specific level names etc if you want to reuse this program code. For example:

if TIMES_LEVELREL EQ 'YEAR'

My time dimension has levels; Year, Quarter, Month, and Day. In some cases it is necessary to change the processing depending the level, for example:

if TIMES_LEVELREL EQ 'YEAR'

then D_RETURN = NA

else if TIMES_LEVELREL EQ 'QUARTER'

then D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')

else if TIMES_LEVELREL EQ 'MONTH'

then D_RETURN = cumsum(&T_FORM(TIME_VIEW 'VL1_1'), TIMES, TIMES_PARENT_QUARTER)

else if TIMES_LEVELREL EQ 'DAY'

then D_RETURN = cumsum(&T_FORM(TIME_VIEW 'VL1_1'), TIMES, TIMES_PARENT_QUARTER)

else D_RETURN = NA

In this code extract you can see the reference to one of the additional time attributes we added in the previous step, PARENT_QUARTER that is used by the program. All the references within the program are based on Standard Form naming conventions. Therefore, the full standard name for this attribute is TIMES_PARENT_QUARTER since the dimension is called TIMES and the attribute is called PARENT_QUARTER.

For simplicity I created the program in the AW containing the cubes, which is not exactly ideal because if you delete the AW to rebuild it you will lose the program code. Alternatively, you can just create a new AW and add the program to the new AW then modify the ONTTACH program in your data AW to automatically attach the program AW.

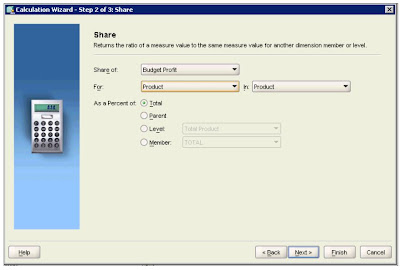

Calculations for dimension members VL_15 to VL_20 are provided as examples and would need to be changed to match the dimensions within your own AWs. For each dimension you will need to create two new dimension members within the TIME_VIEW dimension to return the following share calculations:

- Share of based on the top level total, i.e. All Members

- Share based on parent.

case 'VL1_19': "Channels Share of All Channels

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CHANNELS limit(CHANNELS to TOPANCESTORS using CHANNELS_PARENTREL)

break

case 'VL1_20': "Channels Share of Parent

D_RETURN = &T_FORM(TIME_VIEW 'VL1_1')/&T_FORM(TIME_VIEW 'VL1_1', CHANNELS CHANNELS_PARENTREL)

break

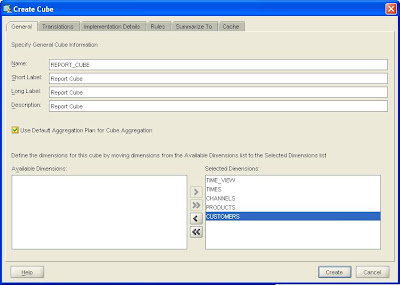

Step 4 – Creating the New Cube.

In my demo schema I have a cube called SALES and it contains two measures: Revenue (called M1) and Quantity (called M2). The dimensionality for this cube is Time, Channel Product, and Customer.

To provide all the time series and comparative calculations I create a new cube that has the same dimensionality as the SALES cube, with one additional dimension. In this cube the Time View dimension is also used. This reporting cube contains no stored measures, but it does contain custom calculated measures for each measure in the SALES cube, however, each calculated measure will return 14 additional time calculations and 6 share calculations to support the base measure controlled by the dimension TIME_VIEW.

The implementation for the cube is largely irrelevant since no stored measures will be present within the cube, only calculated measures. Therefore, you can if you want either accept the default settings or de-select all the various options.

To add the calculated measures to the cube requires the use of a custom measure XML template. To create a calculated measure to support the measure “Revenue” from the SALES cube you will need to create a custom calculation either using an XML template (email me I can send you a blank template) or use the Excel Calculation Utility that can be downloaded from the OLAP Home Page on OTN to install the two custom calculations.

Once you have added these calculated measures to the REPORT_CUBE, the tree in AWM should look like this:

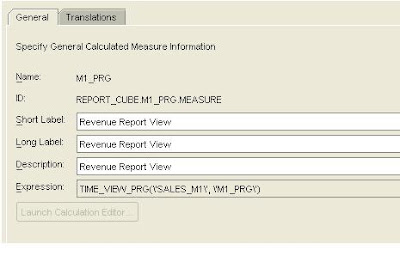

By selecting each of the calculated measures, the details of each calculation will be displayed within the right-hand panel of AWM. For the first calculated measure linked the Revenue measure in the Sales cube the panel looks like this:

For the second calculated measure linked the Quantity measure in the Sales cube the panel looks like this:

The key part is the line showing the “Expression” (in the XML Template this is the attribute ExpressionText), this defines the call to an OLAP DML program called TIME_VIEW_PRG passing the two required arguments of

ExpressionText="TIME_VIEW_PRG('SALES_M1', 'M1_PRG')”

- The cube.measure_name for the base measure, in this case SALES_M1, which is the Revenue measure in the SALES cube.

- The identifier for the calculated measure within the REPORT_CUBE cube.

- CNS REPORT_CUBE_M1_PRG

- EQ TIME_VIEW_PRG('SALES_M1', 'M1_PRG')

- UPDATE

- COMMIT

- CNS REPORT_CUBE_M2_PRG

- EQ TIME_VIEW_PRG('SALES_M2', 'M2_PRG')

- UPDATE

- COMMIT

If want to do this via the PL/SQL interface then you can do something like this:

EXEC DBMS_AW.EXECUTE('AW ATTACH AW_NAME RW FIRST')

EXEC DBMS_AW.EXECUTE('CNS REPORT_CUBE_M1_PRG')

EXEC DBMS_AW.EXECUTE('EQ TIME_VIEW_PRG(''SALES_M1'', ''M1_PRG'')')

EXEC DBMS_AW.EXECUTE('UPDATE')

EXEC DBMS_AW.EXECUTE('COMMIT')

EXEC DBMS_AW.EXECUTE('CNS REPORT_CUBE_M2_PRG')

EXEC DBMS_AW.EXECUTE('EQ TIME_VIEW_PRG(''SALES_M2'', ''M2_PRG'')')

EXEC DBMS_AW.EXECUTE('UPDATE')

EXEC DBMS_AW.EXECUTE('COMMIT')

EXEC DBMS_AW.EXECUTE('AW DETACH AW_NAME')

Step 4a – Viewing the Data.

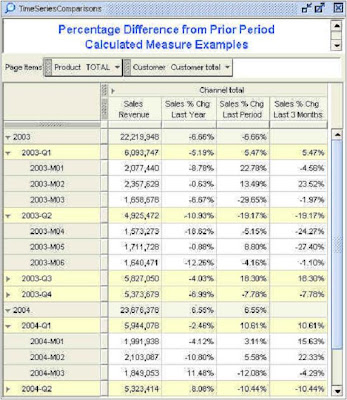

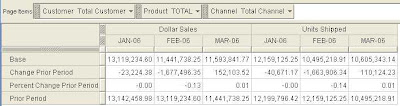

Once the calculated measures have been added to the new cube, REPORT_CUBE, the AWM Data Viewer can be launched to check the results:

In the Query Wizard both sets of measures (stored measures and the new calculated measures) are both available for selection. To see the new calculations that are now available we can select both of the Report View measures as shown here.

Once the measures have been selected, the dimension selector will allow us to pick the calculations we want to display from the list of twenty (there are fourteen base calculations that can be applied to any model, plus the additional share calculations that are based on the dimensions within the source cube, in this case six additional share calculations, making twenty calculated measures in total).

The Dimension Viewer shows all the available calculations for this demo schema and the final report is shown below.

Step 5 – Making the Data Available via SQL.

Is there any real point to this post except to show how clever Oracle OLAP can be? In opinion yes, since this technique can be extremely useful when you need to make an OLAP cube visible to SQL based tools. Analytic Workspace Manager 10g has an relational view generator that is available as a plugin and this makes generating the SQL views to support your OLAP cubes a very quick and easy process. But there we know users don’t just want base measures they want lots and lots of calculations as well. When the time comes to create SQL views as the limit on the number of columns within a view is 1000. This may have been increased in 10g/11g, but even so, navigating a cube with that many columns is not easy.

The OLAP View Generator plugin for AWM10gR2 can be downloaded from here, and the associated readme is here.

Using this model, the calculations simply resolve to another dimension, which translates to one additional column in the view as opposed to, at least, 14 additional columns per source measure and in this case 20 additional columns per source measure. Therefore, this approach makes exposing the cube much easier to manage as can be seen here:

As we can see here, all the calculations are contained within the dimension Time View, which simply gets exposed like any other dimension.

Eh voila, install one measure and you can automatically generate fourteen or more additional measures which are guaranteed to bring a smile to the face of any business user.