http://wiki.oracle.com/page/Oracle+Olap+Option

- Terminology - Key Concepts and Terms

- Oracle OLAP How To - Performance Tuning and more ...

- Script Samples - for DBAs managing the OLAP option.

- Did You Know? - that in the Oracle OLAP option you can.

- Diagnostic Techniques - for those using the OLAP option.

- RAC & GRID - for those using OLAP option with RAC or GRID

Most people when they approach OLAP for the first time, create a data model that either takes too long to build or too long to query. The “too long to query” is usually the first problem to arise and in trying to solve this issue they create the second problem “too long to build”. There is a balance that needs to be achieved when designing OLAP data models. That balance is between pre-solving every level across all dimensions, which increases build time, and providing users with fast query performance. Most people assume there is a direct relationship between the number of levels that are pre-solved and query performance. As one goes up so does the other: pre-solve more levels and query performance improves. Therefore, the answer to poor query performance is to pre-solve all levels across all dimensions correct? Yes and no. Most systems do not have an infinite window for building cubes. Fortunately, using Oracle OLAP Option it is possible to balance the amount of time taken to build a cube and still ensure excellent query performance. How is this achieved?

Oracle OLAP is the most powerful and scalable OLAP server on the market. Because OLAP is inside the database it inherits all the native scalability, security and performance of the Oracle database and it is because the database is so fast and scalable there is a tendency to ignore certain design principles when building an OLAP data model. If the original design and methodology is sound then tuning is very quick and easy to manage. But there is no silver bullet to make OLAP go faster, as one of our OLAP gurus states: there is no ”_OLAP_TURBO_MODE=YES” setting for the init.ora.

What follows is a series of recommendations and observations based on my experience on various OLAP projects to help optimize OLAP builds. This is not the authoritative guide to tuning OLAP data models; just my thoughts.

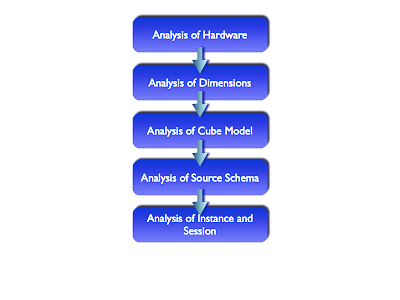

When asked to tune an existing OLAP data model I break the work up in to five sections:

Tuning a data load process needs to be done in a step-by-step process. Trying to rush things and changing too many settings at once can simply create more problems than it solves. It is also important to start at the beginning with the hardware and lastly look at the database instance itself. Most DBAs will be tempted to rip open the init.ora file and start tweaking parameters in the hope of making the build run faster.

However, the area that is likely to have the biggest impact is refining (or possibly even changing) the implementation of the logical model. But when making changes that improve the build performance you should also check the impact on query performance to ensure the amount of time taken to return a query is still within acceptable limits.

Below are the steps I use when I am asked to analyse the build performance on an OLAP schema. But before you start a tuning exercise, I would recommend reading the 2-Day Performance Tuning Guide that is now part of the database documentation suite. It provides a lot of useful information. It is available as an HTML document and PDF document. The PDF document can be downloaded and stored on your laptop/memory stick etc for easy reference.

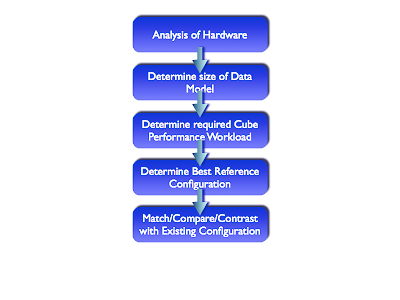

Part 1 - Analysis of Hardware

In any situation the first challenge in a tuning exercise is to ensure the foundation for the whole solution is solid. This tends to be the biggest challenge because it can involve a working with a number of hardware and software vendors. Trying to make sure your environment is based on an adequate configuration can be time consuming and risky, and will probably end in a compromise between performance, scalability, manageability, reliability, and naturally price.

Configurations can be difficult to analyse and most of the time. This analysis typically tends to degenerate into each vendor in the hardware stack blaming the other vendor and/or the database.

Step 1 – Check Patches

When analysing an existing environment make sure all the latest firmware, drivers and O/S patches have been applied. Refer to the Oracle database installation guide, Metalink, and the hardware vendors web sites for more details.

Step 2 – Determine Workload

In a good environment you should be expecting to load about 1 million rows per minute via OLAP. This is the benchmark. Check the XML_LOAD_LOG table from previous builds to determine if this is being achieved. Here is a log from a data load for the Common Schema AW based on a relatively simple view that joins two fact tables together to load three measures. Approximately 900,000 records are loaded in 57 seconds.

In this case, we could conclude this is a reasonable starting point to begin the next phase of the tuning exercise. However, don’t forget the performance initially listed in XML_LOAD_LOG could be influenced by a number of factors, but if the data source is a table or a very simple view, then 1 million rows a minute should be achievable. Anything less tends to indicate some sort of I/O issue, or possibly the use of inefficient SQL to extract data from the source. The ADDM analysis of I/O performance partially depends on a single argument, DBIO_EXPECTED, that describes the expected performance of the I/O subsystem. The value of DBIO_EXPECTED is the average time it takes to read a single database block in microseconds. Oracle uses the default value of 10 milliseconds, which is an appropriate value for most modern hard drives. If your hardware is significantly different, such as very old hardware or very fast RAM disks, consider using a different value. To determine the correct setting for DBIO_EXPECTED parameter, perform the following steps:

- Measure the average read time of a single database block read for your hardware. Note that this measurement is for random I/O, which includes seek time if you use standard hard drives. Typical values for hard drives are between 5000 and 20000 microseconds.

- Set the value one time for all subsequent ADDM executions. For example, if the measured value if 8000 microseconds, you should execute the following command as SYS user:

- EXECUTE DBMS_ADVISOR.SET_DEFAULT_TASK_PARAMETER( 'ADDM', 'DBIO_EXPECTED', 8000);

Parallel vs Serial Processing

As part of this step some consideration needs to given to parallel vs serial processing. I find most people will start by running a build in serial mode and then assume if it takes X amount of time to process in serial mode, running in parallel mode will naturally take X/No of parallel jobs. Of course this is true up to a point. There is a definite tipping point in parallel processing where the law of diminishing returns sets in very quickly. As a starting point if I am going to process a load in parallel I will start by using a job queue = “No. of CPUs-1”. This is usually a good starting point and depending on where the bottlenecks start appearing (CPU waits vs I/O waits) I may increase or decrease this figure during testing.

Parallel processing is a very useful tool for improving performance but you need to use partitioned cubes and the data being load must map across multiple partition keys to result in parallel processing. As before, this is not a silver bullet that will simply make everything run faster. It needs to be used carefully.

Step 3 – Determine Best Reference Configurations

What can be useful is to work from a set of known configurations designed to provide a stated level of performance. Oracle has worked with a number of hardware vendors to provide documented configurations for data warehouse solutions. These configurations can be used as benchmarks and/or recommendations for your environment.

- Reference Configurations for Dell and EMC

- Reference Configurations for HP

- Reference Configurations for IBM

- Reference Configurations for Sun

One of the key questions for the performance tuning exercise is: Are you just tuning the model based on today’s data volumes or should the exercise look to maximise performance of future load volumes. This is a very tricky area to manage and difficult to plan and test. Which is why having a referenceable configuration is so important. Why? Because the reference configurations provide a clear upgrade path and levels of performance are certified along that upgrade path.

Step 4 – Match/Compare/Contrast with Existing Configurations

In reality altering your hardware configuration is going to be a given. To change a configuration is likely to be a costly and time-consuming exercise. However, it should not be ignored. If you have followed and tuned your data model based on the following recommendations and the load and aggregation phase is still too long then a full hardware review may in fact be needed and upgrades may need to be purchased. Hopefully, the next result of this whole exercise is to provide some sort of cost/benefit report to outline expected performance improvements based on additional hardware costs.

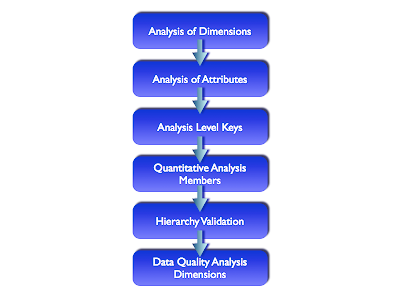

Part 2 - Analysis of Dimensions

The second stage is to review the logical model for the dimensions. This stage is largely to confirm the dimensions are correctly implemented and the source data is of good quality. Most of this analysis is really just making sure there are no big issues within the various dimensions and possibly making small changes based on experience from various projects. But it is important to ensure the dimensions are of “good quality” before moving on to review the cubes – there is no point building a house on a sand bank and then wondering why it gets washed away (if that makes sense?). Good foundations are needed.

Step 1 - Analysis of Attributes

Many customers implement dimensions when they only really need attributes. This usually happens when they are migrating from a legacy OLAP server to Oracle OLAP. We have a customer at the moment that has an existing OLAP data model in a legacy OLAP server based on 60 dimensions. Reviewing the queries the users make against the data model it became clear that many of these dimensions were in fact simple attributes within Oracle OLAP. This can have a significant impact on the design of related cubes and the whole loading process, since fewer dimensions within a cube will improve both load and aggregation times.

Can Oracle OLAP support extremely large dimensional models? Yes it can. The engine will support up to 256 dimensions within a single cube and within the AW you can have as many dimensions as you need. The key point here is: each cube can have its own dimensionality. Oracle OLAP does not implement hyper-cubes where every cube has to share the same dimensionality – one of the key benefits of Oracle OLAP over other legacy OLAP engines is that it does support cubes of different dimensionality.

There is an excellent customer case study in the December 07 OLAP Newsletter that examines how one customer managed a very large data models based on lots of dimensions. The E.ON data model contains multiple cubes (subject areas) each with between 6 to 12 dimensions. The cubes are updated weekly with many millions of rows loaded and aggregated, with about 1 million rows updated in the cube per minute. For more information, read the complete review by clicking here. There are many other customers with even bigger models.

From a UI perspective you need to think very carefully about the number of dimensions within a cube. Many UI studies have shown business users find it increasingly difficult to interpret the results from a dataset where there are more than nine dimensions. Although, as the above case shows, it is possible for some users to interact with larger more complex models providing the information is presented in usable format, it is worth spending some time clarifying the exact dimensionality of each cube.

If you think about a typical crosstab layout, a nine dimensional cube results in one row edge dimension, one column edge dimension and seven page edge dimensions plus the measure dimension. That is a huge amount of information to absorb and, in my opinion, makes constructing queries very difficult. Another, issue that frequently occurs, as the number of dimensions increases, is the game of “hunt-the-data”. Even with only nine dimensions in a cube it is likely the data set will be extremely sparse and drilling down only one or two levels across a couple of dimensions can result in crosstabs with little or no data. Some BI tools try to mask this problem by providing an NA and/or Zero row filters. The net result is usually a “no rows returned” message appearing in the body of the report at regular intervals.

My main recommendation is: Check the number of dimensions in your model and for the sake of your users and try to keep the number within each cube down to something intelligible. For example approximately nine – this is not a hard and fast rule; just a recommendation but do read the E.ON case study as well. If you are presented with a data model, do not be afraid to challenge the dimensionality of the cubes within the model. Make sure all the dimensions within a cube are really required because I can guarantee some are simply basic attributes.

Step 2 - Analysis of Level Keys

There is not much that needs to be done here except to make sure to use surrogate keys except when you are certain the dimension keys are unique across all levels. This is not always the case and using a surrogate key is a good way to ensure your hierarchies are correctly populated. OLAP creates a surrogate key by prefixing the original source key with the level identifier. Therefore, from a storage perspective it makes sense to make the level key as short as possible. For example, don’t create a level identifier such as “PRODUCT_SUB_CATEGORY_SKU_IDENTIFIER”. There is a limit of 30 characters for level names. In practice I have seen issues with both data loading and aggregation where very large dimension keys (i.e. greater than 400 characters) have been created.

In practice I recommend using simple level identifiers such as L1, L2, L3 although this does make writing SQL statements a little more challenging via the SQL Views as the level identifier is used in the column name along with the dimension and it is not exactly obvious what each column contains when they are called PODUCT_L1, PRODUCT_L2 etc.

Surrogate vs Natural Keys

The use of surrogate keys is an interesting area. During some projects it has been found that by not using surrogate keys build performance has increased. This does make sense since the source data for cube will have to be reformatted at load time to ensure the key is valid. In some cases the amount of time required to manipulate the incoming key values may be minimal. In other cases it has had a significant impact on load performance – the “1 million rows a minute” benchmark was not achieved and reverting to natural keys did improve load times. If the data source can be guaranteed to provide unique keys across all levels it is probably worth switching to natural keys. But be warned – you cannot switch between using surrogate and natural keys if the cube already contains data.

Step 3 - Quantitative Analysis of Members

This is an important step as it will allow us to determine which levels to pre-aggregate within the cube. In most cases the default skip level approach to pre-solving levels within a cube is a reasonable starting point. But it is possible to design a much better model by analysing the number of members at each level and the average number of children for each level.

Lets look at two real customer examples:

Dimension 1

A change as simple as this could have a huge impact on the amount of time taken to aggregate a cube.

However, in some cases the OLAP Compression feature can be useful in terms of allowing you to pre-compute additional lower levels within a hierarchy for little or no additional cost because the sparsity of the data allows higher levels to compressed out of the cube. If you have a situation where there is almost a 1:1 relationship between a level and the next level down in the hierarchy it would make sense to pre-compute that level since the compression feature will compress out the redundant data. For example:

Dimension 2

In this example the hierarchy is relatively flat and the number of children returned at each level varies quite a lot. But at the lowest levels, there is likely to be a large number of instances where a parent only has a single child and in these situations the compress feature can compress out the repeated values. Therefore, it might make sense to solve levels L5 and L4.

Dimension 3

In this example the hierarchy here shows the normal pyramid approach and is definitely bottom heavy. But the upper levels contain relatively few members and drilling typically returns very few members. The default skip level approach for this dimension may in fact be pre-solving too many levels. In practice it may take 2 or 3 builds to determine which are the best levels to pre-solve, with a good starting point being:

- Run 1: L7, L5, L2

- Run 2: L7, L6, L1

- Run 3: L7, L4, L2

Step 4a - Hierarchy Validation

Always, always check your hierarchies are functioning correctly. This involves using the Data Viewer feature within AWM. You should make sure the dimension is drillable and that selecting each level in turn returns the correct result-set.

A better approach is actually to make the database do the work, but this requires some additional SQL commands to be executed against the source tables. Ideally, try and create a relational dimension over the source table(s). Normally, the relational dimension object is used within query rewrite, which in this case we are not really concerned with for 10gR2 (in 11g the story is quite different as a cube can be registered as a materialised view and used for query-rewrite). But this does allow us to use the dbms_dimension.validate_dimension procedure verifies that the relationships specified in a dimension are valid. The rowid for any row that is found to be invalid will be stored in the table DIMENSION_EXCEPTIONS in the user's schema. The procedure looks like this:

DBMS_DIMENSION.VALIDATE_DIMENSION (

dimension IN VARCHAR2,

incremental IN BOOLEAN := TRUE,

check_nulls IN BOOLEAN := FALSE,

statement_id IN VARCHAR2 := NULL );

Note that before running the VALIDATE_DIMENSION procedure, you need to create a local table, DIMENSION_EXCEPTIONS, by running the provided script utldim.sql. If the VALIDATE_DIMENSION procedure encounters any errors, they are placed in this table. Querying this table will identify the exceptions that were found. To query this table you can use a simple SQL statement such as this:

SELECT * FROM dimension_exceptions

WHERE statement_id = 'Product Validation';

However, rather than query this table, it may be better to query the rowid of the invalid row to retrieve the actual row that has generated the errors. In this example, the dimension PRODUCTS is checking a table called DIM_PRODUCTS. To find any rows responsible for the errors simply link back to the source table using the rowid column to extract the row(s) causing the problem, as in the following:

SELECT * FROM DIM_PRODUCTS

WHERE rowid IN (SELECT bad_rowid

FROM dimension_exceptions

WHERE statement_id = 'Product Validation');

Step 4b - Hierarchy Order

The order of hierarchies within a dimension can have a significant impact on query performance. When solving levels at run-time the OLAP engine will use the last hierarchy in the list as the aggregation path. Consider this example using a time dimension:

Let’s assume we pre-compute the levels Month and Quarter. But decide not to pre-compute the Year level because the main hierarchy used during queries is the Julian Year-Quarter-Month-Day and, therefore, the total for each Year will be derived from adding up just 4 values. In fact, the aggregation engine looks at all the hierarchies to find the lowest common level across all hierarchies, which in this case is Day. It then selects the last hierarchy in the list containing the level Day, in this case the Week hierarchy. Therefore, the value for the each dimension member at the Year level will be the result of adding up 365/366 values and not simply 4 Quarter values.

The obvious question is why? The answer is to ensure backward compatibility with the Express ROLLUP command from which the AGGREGATE command is derived. When the Aggregate command was introduced one of our requirements was that it produced numbers that matched those of Rollup, thus in cases where an aggregate node was declared in multiple hierarchies we always produced numbers based on the LAST definition of the node because that would be the number that matched the procedural approach taken by rollup. Because of this feature an alternative approach to hierarchy ordering might be as follows:

Now the run-time aggregation for Year will be derived from the level Quarter, which has been pre-computed, and the result will be returned much faster.

Step 5 - Check the Data Quality

This last step is probably the most important, especially as OLAP style projects tend to be scheduled once all the ETL has been completed. But you should never take the quality of the source data for granted. Ideally you can use the Data Quality option of Warehouse Builder (which is a costed option for OWB) and analyse the source data for each dimension to make sure the data is of a reasonable quality. Things to check are:

- Consistent data type

- Number distinct values

- Min and Max values

- Domain members

- Number of members not present in the fact table

The last one is an interesting check especially if you are using that dimension as a partition key. If you are creating lots and lots of empty partitions that will never contain data then should those members even be loaded? In a recent project I identified a dimension that contained over 300,000 leaf node members, but in the main fact table there was only data for 50% of those members. The obvious question is why load 150,000 plus members if you are never going to post data to them.

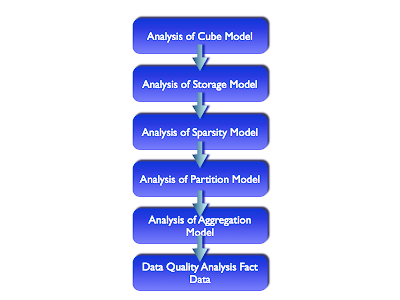

Part 3 - Analysis of Cubes

The next stage is to review the data model for each cube in turn. It is in this area the biggest impacts on load time are likely to be achieved.

Step 1 - Analysis of Storage Model

It is important to assign an efficient storage model to a cube, as this will have a significant impact on both the load and aggregation times.

- Make sure compression is enabled.

- Data type should be either DECIMAL or INTGER

- Warning do not use NUMBER as this uses approximately 3.5 times the storage compared to DECIMAL, but it is the default. Number requires 22 bytes and Decimal requires 8 bytes (See OLAP Application Developers Guider, 10.2.0.3, Chapter 7 Aggregating Data).

Step 2 - Analysis of Sparsity Model

Management of sparsity within a cube is critical. Firstly the order of the dimensions is very important. When using compression, which should always be enabled, dimensions should be ordered with the dimension with the least number of members first and the dimension with the most number of members last. The most common question is: Should time be dense or sparse?

Answer – it depends. This is where you need to have a deep understanding of the source data and the data quality features in OWB can really help in this type of situation. In some models time works best dense and in other models time works best when it is sparse. This is especially true when time is used as the partition dimension. Therefore, you need to plan for testing these different scenarios.

There is sparsity advisor package in the database, which analyses the source data in relational tables and recommends a storage method. The recommendations may include the definition of a composite and partitioning of the data variable. The Sparsity Advisor consists of these procedures and functions:

- SPARSITY_ADVICE_TABLE Procedure

- ADD_DIMENSION_SOURCE Procedure

- ADVISE_SPARSITY Procedure

- ADVISE_DIMENSIONALITY Function

- ADVISE_DIMENSIONALITY Procedure

- Call SPARSITY_ADVICE_TABLE to create a table for storing the evaluation of the Sparsity Advisor.

- Call ADD_DIMENSION_SOURCE for each dimension related by one or more columns to the fact table being evaluated. The information that you provide about these dimensions is stored in a DBMS_AW$_DIMENSION_SOURCES_T variable.

- Call ADVISE_SPARSITY to evaluate the fact table. Its recommendations are stored in the table created by SPARSITY_ADVICE_TABLE. You can use these recommendations to make your own judgements about defining variables in your analytic workspace, or you can continue with the following step.

- Call the ADVISE_DIMENSIONALITY procedure to get the OLAP DML object definitions for the recommended composite, partitioning, and variable definitions, or

- Use the ADVISE_DIMENSIONALITY function to get the OLAP DML object definition for the recommended composite and the dimension order for the variable definitions for a specific partition.

The OLAP Reference manual provides an example script for the GLOBAL demo schema to analyse the relational fact table. The amount of information required does seem a little excessive given that most of it could be extracted from the various metadata layers – may be some bright person will create a wrapper around this to simplify the whole process.

On the whole I still find the majority of models work best with everything sparse and to far I have only found a few cases where load and aggregation times improved when time was marked dense. But as with all tuning exercises, it is always worth trying different options, as there is no “fits-all” tuning solution with OLAP.

Step 3 - Analysis of Partition Model

Partitioning is managed at both the logical and physical levels. At the logical level, it is possible to partition a cube using a specific level to split the cube into multiple chunks. At the physical level, it is possible to partition the actual AW$ table and associated indexes that form the AW.

Logical Partitioning

Always start by use partitioning. Why? Because partitioning allows the cube to be broken down into smaller segments – much like relational table partitioning. This can help improve the aggregation phase of a build because the engine is able to load more related data into memory during processing. It also allows you to use the parallel update features of Oracle OLAP during a build. But there are some things to consider when setting up partitioning. When using partitioning you should:

- Try to select a dimension that has balanced partitions, such as Time

- Try to select a dimension level that is not too volatile, this is one of the reasons for electing to use a dimension such as time.

- Select the Level based on the information collected during Step 3 of the analysis of dimensions. In 10g, the levels above the partition key are solved at run time (this is resolved in 11g) so select the level for the partition key carefully.

- When selecting the partition key consider the impact this will have on the default partition, which contains all the levels above the partition level. For example partitioning on a level such as Day might generate nice small partitions but the default partition will contain all the other members such as Week, Month, Quarter and Year making the default partition very large.

Parallel vs Serial Processing

Logical partitioning is required for cubes where you want to enable parallel processing. But be warned, running a job in parallel may not improve processing times. In fact using too many parallel processes can have the opposite affect. But used wisely, parallel processing can drastically improve processing times provided the server is not already CPU bound. As a starting point I always begin testing by setting the value MaxJobQueues to “No. of CPUs-1” in the XML file for the definition of the build. In some cases even this might be too high and reducing this figure can actually improve processing times. Tuning AW parallel processing is exactly the same as tuning relational parallel processing – you need to determine where the point of diminishing returns sets in, which can be a combination:

CPU loading

I/O bandwidth

Cube design

Do not assume throwing parallel resources at a performance issue will resolve the whole problem. Managed carefully this can provide a significant improvement in peformance.

Physical Partitioning

The aim of relational (physical) partitioning is to allow you to control the tablespace for each partition thus distributing the load across multiple disks, to spread data across a variety of disk types (see information on ILM on OTN) and to enhance query performance since it is possible to direct specific queries to a smaller subset of data.

Some, but not all of this applies to an AW. From a tablespace perspective it is probably easier to use ASM to manage and distribute the storage of an AW across multiple disks as opposed to creating a partitioned AW$ table spread across multiple tablespaces. The reason the AW$ table is partitioned is optimise the lob performance. Each partition has its own lob index, which manages its storage, and a separate slave process can update each partition.

The relational table that acts as a container for the AW, AW$xxxxx , can be partitioned to break the AW into more physical chunks which can reduce contention for locks on the relational objects (AW$ table and related indexes) during parallel data loading jobs. By default each AW is created using a range partition key of gen# and 8 subpartitions. The DDL below is from a default AW created via AWM. Note the clauses to manage the partition and sub-partitions:

- PARTITION BY RANGE ("GEN#")

- SUBPARTITION BY HASH ("PS#","EXTNUM")

- SUBPARTITIONS 8

CREATE TABLE "BI_OLAP"."AW$SH_AW"

("PS#" NUMBER(10,0),

"GEN#" NUMBER(10,0),

"EXTNUM" NUMBER(8,0),

"AWLOB" BLOB,

"OBJNAME" VARCHAR2(256 BYTE),

"PARTNAME" VARCHAR2(256 BYTE))

PCTFREE 10 PCTUSED 40 INITRANS 4 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT)

TABLESPACE "BI_OLAP"

LOB ("AWLOB") STORE AS (

DISABLE STORAGE IN ROW CHUNK 8192 PCTVERSION 0

CACHE

STORAGE(

BUFFER_POOL DEFAULT))

PARTITION BY RANGE ("GEN#")

SUBPARTITION BY HASH ("PS#","EXTNUM")

SUBPARTITIONS 8

(PARTITION "PTN1" VALUES LESS THAN (1)

PCTFREE 10 PCTUSED 40 INITRANS 4 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT)

TABLESPACE "BI_OLAP"

LOB ("AWLOB") STORE AS (

DISABLE STORAGE IN ROW CHUNK 8192 PCTVERSION 0

CACHE READS LOGGING

STORAGE(

BUFFER_POOL DEFAULT))

( SUBPARTITION "SYS_SUBP16109"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16110"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16111"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16112"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16113"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16114"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16115"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16116"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP") ,

PARTITION "PTNN" VALUES LESS THAN (MAXVALUE)

PCTFREE 10 PCTUSED 40 INITRANS 4 MAXTRANS 255

STORAGE(

BUFFER_POOL DEFAULT)

TABLESPACE "BI_OLAP"

LOB ("AWLOB") STORE AS (

DISABLE STORAGE IN ROW CHUNK 8192 PCTVERSION 0

CACHE

STORAGE(

BUFFER_POOL DEFAULT))

( SUBPARTITION "SYS_SUBP16117"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16118"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16119"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16120"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16121"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16122"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16123"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP",

SUBPARTITION "SYS_SUBP16124"

LOB ("AWLOB") STORE AS (

TABLESPACE "BI_OLAP" )

TABLESPACE "BI_OLAP") ) ;

The best overall approach here is to ensure you have the correct number of sub-partitions to reduce contention during updates. For example, if you have a cube with three years of data partitioned using the level month, it would be sensible to add and additional 36 subpartitions to the AW$ table to spread the load and reduce contention during parallel updates. You can add more sub-partitions quickly and easily as follows.

alter table aw$test modify partition ptnn add subpartition ptnn_009 update indexes;

alter table aw$test modify partition ptnn add subpartition ptnn_010 update indexes;

Therefore, I recommend adding additional subpartitions at the physical level to match the number of logical partitions within the cube.

It is possible to go to the next level (if you really feel it is necessary) and directly manage the DDL used to create the AW and there are a number of commands that allow you to control the default tablespace and the number of partitions. You can increase the number of sub-partitions within each gen# partition using either the ‘aw create command’ as shown here:

exec dbms_aw.execute('aw create owner

Note the key word “partitions” actually refers to the number of subpartitions.

It is in fact possible to define a target tablespace for the AW via the DBMS_AW.ATTACH procedure:

DBMS_AW.AW_ATTACH (

awname IN VARCHAR2,

forwrite IN BOOLEAN DEFAULT FALSE,

createaw IN BOOLEAN DEFAULT FALSE,

attargs IN VARCHAR2 DEFAULT NULL,

tablespace IN VARCHAR2 DEFAULT NULL);

For example, the following SQL statement creates the AW GLOBAL_PROGRAMS as the last user-owned analytic workspace in tablespace USERS:

SQL>EXECUTE DBMS_AW.AW_ATTACH('global_programs', true, true, 'last', ‘USERS’);

AWM 10gR2 (10.2.0.03A) also allows you to define the tablespace when you create the AW, but the tablespace name is not included in the XML definition of the AW.

If you think you need to get right down to the base DDL level to control the allocation of tablespaces used by the AW then you will need to manually define the AW$ table. The easiest method is to create another AW$ table using the DDL from the original AW$ table and modifying it to create your own placement statements for the tablespaces. To get the DDL for an AW (table and index) you can either use SQLDeveloper or use the DBMS_METDATA package as follows:

set heading off;

set echo off;

set pages 999;

set long 90000;

spool aw_ddl.sql

select dbms_metadata.get_ddl('TABLE','AW$SH_AW','SH_OLAP') from dual;

select dbms_metadata.get_ddl('INDEX','SH_AW_I$','SH_OLAP') from dual;

spool off;

These statements show the exact DDL used to generate the AW$ table and its associated index. Once you have the DDL you can then modify the tablespace statements for each sub-partition to spread the loading across different tablespaces and hence data files. But it is much easier to use ASM to manage all this for you.

There is one major issue with manually creating an AW – the standard form metadata is not automatically added to the AW and there is no documented process for achieving this. The only reliable solution I have found is to first create the AW via AWM and then export the empty AW to an EIF file. This EIF file will then contain the standard form metadata objects. Once you have deleted and re-created the AW with the based on your specific tablespace and subpartition requirements the standard form metadata can be added by importing the EIF file. Not the prettiest of solutions but it works – at least with 10gR2.

Step 4 - Analysis of Aggregation Model

The Summarize To Tab

This is where the biggest improvements to build time are likely to be uncovered. The “Summarize To” tab allows you to select the levels to pre-solve. Based on the analysis of the number of members and children at each level it should be possible to tune the levels to pre-solve only the most important levels.

This step will require lots of testing and many builds to finally arrive at the best mix of levels.

The Rules Tab

If you are using the same aggregation method across all dimensions, such as SUM, the aggregation engine will optimise the processing order for the dimensions by solving them in the reverse order from highest cardinality to lowest cardinality. Despite this I always manually order the dimensions myself anyway on the Rules Tab.

Where you are using different aggregation methods across the various dimensions it is important to ensure the dimensions are in the correct order to return the desired result. If you change the order to improve aggregation performance where different aggregation methods are used, check the results returned are still correct. Getting the wrong answer very quickly is not a good result.

Step 5 - Analysis of Data Quality

This is another area that can have a huge impact of load times. There are three key things to consider:

- Number of NA cells

- Number of Zero cells

- Sparsity patterns

Where there is a need to show a zero balance, create a separate cube load only the zero balances into that cube but do not aggregate the data. Use a formula to glue the non-zero balance data to the zero balance data, such as:

Nafill(CUBE1_NON_NA_DATA, CUBE2_ZERO_BALANCE_DATA)

This will significantly improve the performance of the main cube since the aggregation engine only has to deal with real balances.

Sparsity patterns are important when you have a cube that contains a large number of measures all sourced from the same fact table. In another situation, a customer had designed two cubes with about 30 measures in one cube and two measures in the other cube. The source fact table contained 75 million rows. The data load was taking about ten hours for just three years of data. Looking at the data and executing various SQL counts to determine the number of NULL cells and Zero cells for each measure, it was clear there were five different sparsity patterns within the fact table.

By breaking the single cube into five different cubes, creating views over the base fact table to only return the relevant columns for each cube and removing all NA and zero values the amount of data being loaded each month declined to the values shown below:

This change combined with changes to the selection of levels pre-aggregated reduced the build and aggregation time by over 50% with little impact on query performance.

It is critical to fully understand the source data and how it is stored. As the number of measures within a cube increases it is likely that the number of times an NA or Zero value appears will also increase. Breaking a large cube up into smaller more focused chunks in this type of scenario can provide significant benefits.

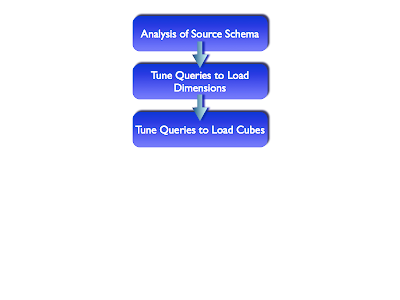

Part 4 - Analysis of Source Schema Queries

When loading data into a cube from a relational source schema you should be able to achieve about 1 million rows updated in the cube per minute. If you are not seeing that level of throughput from the source table/view, you need to look at:

- Hardware issues

- Cube design issues

- Query design issues

Tuning the queries used to load dimension members and data into cubes can be very important. When either a data load or dimension load is executed a program is created containing the SQL to fetch the data from the relational table. It is important to make sure the SQL being executed is as efficient as possible. By using views as the source for your mappings it is relatively easy to add additional hints to ensure the correct execution path is used. Note - with 11g this can cause problems if the cube is to be exposed as a materialised view. For query re-writes to function the cube must use the underlying fact table that is part of the end-user query.

Step 1 - Analysing SQL Statements

To optimise the SQL executed during a load you have use either, or both, of the approaches:

- Enterprise Manager – via Tuning Packs

- Manual analysis

Enterprise Manager

Enterprise Manager can be used to monitor the results from a SQL statement. The Performance Tab provides the environment for tuning SQL statements as well as monitoring the operation of the whole instance. The easiest way to find the SQL statement used by the data load process is to search for a SELECT statement against the view/table used in the mapping. The SQL can quite often be found in the “Duplicate SQL” report at the bottom of the Top Activity page:

Once you have found the SQL statement, clicking on the SQL statement listed in the table will present a complete analysis of that statement and allow you to schedule the SQL Tuning Advisor. The output from the Advisor includes recommendations for improving the efficiency of that statement. Below is the analysis of the resources used to execute the product dimension SQL statement:

Scheduling the Advisor

Scheduling the advisor to analyse your SQL statement is very simple. Click on the button in the top right corner of the SQL Details screen. This will launch the SQL Advisor where you need to provide:

- A description for the job

- Set the scope to either limited or comprehensive (there are on screen notes to help you make this decision)

- Time and date to run the Advisor, since it might not be possible to run the advisor immediately.

Note: These features are costed extensions to the Enterprise Manager console and cannot be used on a production system unless your customer has bought these extensions.

Manual Tuning

So how do you capture the SQL being executed during a data load? In 10g, during a load process an OLAP DML program is created called '___XML_LOAD_TEMPPRG'.

This program contains the code used during the build process and it is relatively easy to capture this code either via Enterprise Manager or manually at the end of the build.

(For a data load in 11g, look at the CUBE_BUILD_LOG’s “output” column. The table is in the AW’s schema).

To manually capture the program code (in 10g), create your own job to manually execute a data load. For example below is a job to refresh the members in the dimension Products. Note the first three lines and last four lines that control the dumping of the program code so we can capture the SQL.

set serveroutput on

exec dbms_aw.execute('aw attach SH_AW rw first');

exec dbms_aw.execute('cda BI_DIR');

call SQL file to refresh cube

exec dbms_aw.execute('outfile loader.txt');

exec dbms_aw.execute('DSC ___XML_LOAD_TEMPPRG')

exec dbms_aw.execute('outfile eof');

exec dbms_aw.execute('aw detach SH_AW');

The resulting program looks like this, with the SQL code highlighted in bold:

DEFINE ___XML_LOAD_TEMPPRG PROGRAM INTEGER

PROGRAM

variable _errortext text

trap on HADERROR noprint

sql declare c1 cursor for -

select SH.VW_PRODUCTS_DIM.PROD_ID, -

SH.VW_PRODUCTS_DIM.PROD_DESC, -

SH.VW_PRODUCTS_DIM.PROD_DESC, -

SH.VW_PRODUCTS_DIM.PROD_PACK_SIZE, -

SH.VW_PRODUCTS_DIM.PROD_WEIGHT_CLASS, -

SH.VW_PRODUCTS_DIM.PROD_UNIT_OF_MEASURE, -

SH.VW_PRODUCTS_DIM.SUPPLIER_ID -

from SH.VW_PRODUCTS_DIM -

where -

(SH.VW_PRODUCTS_DIM.PROD_ID IS NOT NULL)

sql open c1

if sqlcode ne 0

then do

_errortext = SQLERRM

goto HADERROR

doend

sql import c1 into :MATCHSKIPERR SH_OLAP.SH_AW!PRODUCTS_PRODUCT_SURR -

:SH_OLAP.SH_AW!PRODUCTS_LONG_DESCRIPTION(SH_OLAP.SH_AW!ALL_LANGUAGES 'AMERICAN') -

:SH_OLAP.SH_AW!PRODUCTS_SHORT_DESCRIPTION(SH_OLAP.SH_AW!ALL_LANGUAGES 'AMERICAN') -

:SH_OLAP.SH_AW!PRODUCTS_PACK_SIZE -

:SH_OLAP.SH_AW!PRODUCTS_WEIGHT_CLASS -

:SH_OLAP.SH_AW!PRODUCTS_UNIT_OF_MEASURE -

:SH_OLAP.SH_AW!PRODUCTS_SUPPLIER_ID

if sqlcode lt 0

then do

_errortext = SQLERRM

goto HADERROR

doend

sql close c1

sql cleanup

return 0

HADERROR:

trap on NOERR1 noprint

sql close c1

NOERR1:

trap off

sql cleanup

call __xml_handle_error(_errortext)

END

Once you have the statement you can use SQLDeveloper’s explain plan feature to determine the execution plan. When dealing with cubes, it is likely the source fact table will be partitioned; therefore, you need to ensure partition elimination is occurring correctly.

If additional hints need to be added to make the query more efficient, these can be added to the view definition. This approach does not automatically generate recommendations so you will need to have a solid grasp of SQL tuning to ensure your query is based on the most optimal execution plan.

Step 2 – Managing Sort Resources

Sorting the source data is quite important for both dimensions and facts. By default, OLAP sorts dimensions alphabetically in ascending order based on the long description. Therefore, it makes sense for the relational source to provide the data in the required order, especially for the dimension loads.

Optimising cube loads requires making sure the sorting is based on the same order as the dimensions are listed within the partitioned composites. This will be the same order as shown on the implementation details tab.

OLAP load operations are sort intensive. You may need to increase the sort_area_size setting within the database to try and ensure the various sorting operations during a load are performed in memory and not disk. The default setting is 262,144. As part of a load process you can increase the amount of sort memory available as follows:

exec DBMS_AW.EXECUTE('SortBufferSize=10485760');

Executing this command before starting a data load will increase the amount of resources allocated to memory sorts, in this case providing approximately 10Mb of memory. To permanently set the SortBufferSize to 10Mb, issue the following commands:

exec DBMS_AW.EXECUTE('aw attach my_aw_name rwx');

exec DBMS_AW.EXECUTE('SortBufferSize=10485760');

exec DBMS_AW.EXECUTE('update');

exec DBMS_AW.EXECUTE('commit');

exec DBMS_AW.EXECUTE('aw detach my_aw_name');

Or you can simply set the option before executing the XML job definition:

set serveroutput on

exec dbms_aw.shutdown;

exec dbms_aw.startup;

exec dbms_aw.execute('aw attach SH_AW rw first');

exec DBMS_AW.EXECUTE('SortBufferSize=10485760');

call SQL file to refresh cube

exec DBMS_AW.EXECUTE('SortBufferSize=262144');

exec dbms_aw.execute('update;commit');

exec dbms_aw.execute('aw detach SH_AW');

exec dbms_aw.shutdown;

exec dbms_session.free_unused_user_memory;

For more information on this subject area refer to the next session on monitoring system resources.

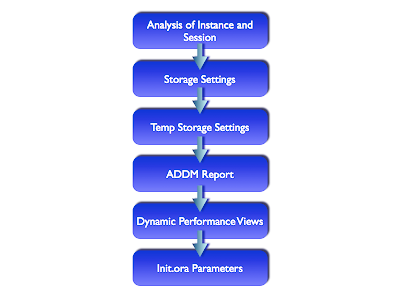

Part 5 - Analyis of the Database

There are a number of areas that are important when tuning a data load process and the areas outlined in this section are really just going to tweak the performance and may or may need result in significant performance improvements. But this area can provide the “icing on the cake” in terms of extracting every last ounce of performance.

Step 1a – Relational Storage Settings

Make sure logging is switched off on the tablespace used to store the AW. Since the AW does not support redo there is not point in generating it. Make sure you have enough space within the tablespace before you start a build. A lot of time can be consumed extending the tablespace if you are not careful.

If you are using Data Guard, it will not be possible to switch of redo. The alternative is to increase REDO Log Size to between 100M and 500M, and also modify LOG_BUFFER parameter to 10M (for example) to allow for more efficient index lob creation, and also try to move TEMP, UNDO and REDO logs to fastest disk.

Step 1b –AW Storage Settings

If the cubes within an AW contain a large number of partitions, then performance can be improved by adding additional physical partitions to AWs. The AW should be logically partitioned and modelled well and then should also be physically partitioned as it improves update performance by reducing index lob contention. For example, if the main data cube contains 36 months of data and is logically partitioned by month in the AW, then the physical partitioning of the AW should match the number of logical partitions. To override the default of eight partitions it is necessary to manually define the AW and set the required number of partitions as show here:

SQL> exec dbms_aw.execute('aw create scott.product_AW partitions 36');

However, this approach does create some additional complications regarding the creation of standard form metadata. This metadata is required to make the AW visible to AWM and other OLAP aware tools. In the vast majority of cases it will be necessary to create a standard form metadata compliant AW. See the Part 3 Analyis of Cube Model, Step 3 – Partitioning for more information.

Step 2 - Temp Storage Settings

Pre-allocating space within the temp tablespace prior to running a build can make some performance improvements. When pre-allocating space make sure the temp tablespace is not set to auto-extend and the correct (most efficient) uniform extend size is used. The procedure below will pre-allocate TEMP Tablespace. Alter the for i in 1..1000000 are required. This example will pre-allocate approximately 1.5GB of TEMP tablespace. Make sure your default temporary tablespace/group is not set to auto-extend unlimited. It should be fixed to the required size.

create or replace procedure preallocate_temp as

amount integer := 26;

buffer varchar2(26) := 'XXXXXXXXXXXXXXXXXXXXXXXXXX';

done boolean := false;

out_of_temp exception;

position integer := 10240;

pragma exception_init(out_of_temp,-01652);

tmppre clob;

begin

dbms_lob.createtemporary(tmppre, true,d bms_lob.session);

dbms_lob.open(tmppre, dbms_lob.lob_readwrite);

for i in 1..130400

loop

if (done = true) then

dbms_lob.close(tmppre);

dbms_lob.freetemporary(tmppre);

end if;

begin

dbms_lob.write(tmppre, amount, position, buffer);

exception when out_of_temp then done := true;

end;

position := position + amount + 10240;

end loop;

dbms_lob.close(tmppre);

dbms_lob.freetemporary(tmppre);

end;

/

conn prealltemp/oracle

exec preallocate_temp;

disc;

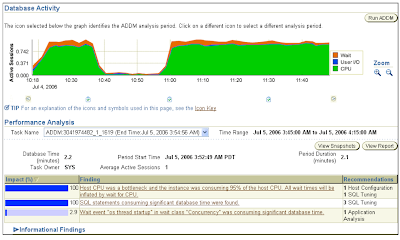

Step 3 - ADDM Report

ADDM (Automatic Database Diagnostic Monitor) is a self-diagnostic engine built into the Oracle Database kernel, which automatically detects and diagnoses common performance problems, including:

- Hardware issues related to excessive I/O

- CPU bottlenecks

- Connection management issues

- Excessive parsing

- Concurrency issues, such as contention for locks

- PGA, buffer-cache, and log-buffer-sizing issues

- Issues specific to Oracle Real Application Clusters (RAC) deployments, such as global cache hot blocks and objects and interconnect latency issues

Enterprise Manager or SQLPlus. For tuning OLAP data loads, ADDM is always a good place to start. In addition to diagnosing performance problems, ADDM recommends possible solutions. When appropriate, ADDM recommends multiple solutions, which can include:

- Hardware changes

- Adding CPUs or changing the I/O subsystem configuration

- Database configuration

- Changing initialization parameter settings

- Schema changes

- Hash partitioning a table or index, or using automatic segment-space management (ASSM)

- Application changes

- Using the cache option for sequences or using bind variables

- Using other advisors

- Running the SQL Tuning Advisor on high-load SQL statements or running the Segment Advisor on hot objects

ADDM benefits apply beyond production systems; even on development and test

Systems. ADDM can provide an early warning of potential performance problems. Typically the results from an ADDM snapshot are viewed via various interactive pages within Enterprise Manager, as shown below:

Alternatively you can access ADDM reports using the SQL*Plus command line by calling the new DBMS_ADVISOR built-in package. For example, here's how to use the command line to create an ADDM report quickly (based on the most recent snapshot):

set long 1000000

set pagesize 50000

column get_clob format a80

select dbms_advisor.get_task_report(

task_name, 'TEXT', 'ALL')

as ADDM_report

from dba_advisor_tasks

where task_id=(

select max(t.task_id)

from dba_advisor_tasks t, dba_advisor_log l

where t.task_id = l.task_id

and t.advisor_name='ADDM'

and l.status= 'COMPLETED');

The ‘ALL’ parameter generates additional information about the meaning of some of the elements in the report. The most interesting section of the report relates to the "Findings" for each issue. This outlines the impact of the identified problem as a percentage of DB time, which correlates with the expected benefit, based on the assumption the problem described by the finding will be solved if the recommended action is taken.

In the example below the recommendation is to adjust the sga_target value in the parameter file:

FINDING 3: 5.2% impact (147 seconds)

---------------------------------------

The buffer cache was undersized causing significant additional read I/O.

RECOMMENDATION 1: DB Configuration, 5.2% benefit (147 seconds)

ACTION: Increase SGA target size by increasing the value of parameter "sga_target" by 24 M.

SYMPTOMS THAT LED TO THE FINDING:

Wait class "User I/O" was consuming significant database time. (5.3% impact [150 seconds])

...

To get more information this feature refer to the Oracle® Database 2 Day + Performance Tuning Guide 10g Release 2 (10.2).

For the HTML version, click here for 10gR2 and here for 11g.

For the PDF version, click here for 10gR2 and here for 11g

Step 4 - Dynamic Performance Views

Each Oracle database instance maintains a set of virtual tables that record current database activity and store data about the instance. These tables are called the V$ tables. They are also referred to as the dynamic performance tables, because they store information relating to the operation of the instance. Views of the V$ tables are sometimes called fixed views because they cannot be altered or removed by the database administrator. The V$ tables collect data on internal disk structures and memory structures. They are continuously updated while the database is in use. The SYS user owns the V$ tables. In addition, any user with the SELECT CATALOG role can access the tables. The system creates views from these tables and creates public synonyms for the views. The views are also owned by SYS, but the DBA can grant access to them to a wider range of users.

Among these are tables that collect data on OLAP operations. The names of the OLAP V$ views begin with V$AW:

- V$AW_AGGREGATE_OP

- Lists the aggregation operators available in the OLAP DML.

- V$AW_ALLOCATE_OP

- Lists the allocation operators available in the OLAP DML.

- V$AW_CALC

- Collects information about the use of cache space and the status of dynamic aggregation.

- V$AW_LONGOPS

- Collects status information about SQL fetches.

- V$AW_OLAP

- Collects information about the status of active analytic workspaces.

- V$AW_SESSION_INFO

- Collects information about each active session.

V$AW_CALC

This reports on the effectiveness of various caches used by Oracle OLAP and the status of processing by the AGGREGATE function. Oracle OLAP uses the following caches:

- Aggregate cache: An internal cache used by the aggregation subsystem during querying. It stores the children of a given dimension member, such as Q1-04, Q2-04, Q3-04, and Q4-04 as the children of 2004.

- Session cache: Oracle OLAP maintains a cache for each session for storing the results of calculations. When the session ends, the contents of the cache are discarded.

- Page pool: A cache allocated from the User Global Area (UGA), which Oracle OLAP maintains for the session. The page pool is associated with a particular session and caches records from all the analytic workspaces attached in that session. If the page pool becomes too full, then Oracle OLAP writes some of the pages to the database cache. When an UPDATE command is issued in the OLAP DML, the changed pages associated with that analytic workspace are written to the permanent LOB, using temporary segments as the staging area for streaming the data to disk. The size of the page pool is controlled by the OLAP_PAGE_POOL initialization parameter.

- Database cache: The larger cache maintained by the Oracle RDBMS for the database instance.

Because OLAP queries tend to be iterative, the same data is typically queried repeatedly during a session. The caches provide much faster access to data that has already been calculated during a session than would be possible if the data had to be recalculated for each query.

The more effective the caches are, the better the response time experienced by users. An ineffective cache (that is, one with few hits and many misses) probably indicates that the data is not being stored optimally for the way it is being viewed. To improve runtime performance, you may need to reorder the dimensions of the variables (that is, change the order of fastest to slowest varying dimensions).

V$AW_LONGOPS

This view will identify the OLAP DML command (SQL IMPORT, SQL FETCH, or SQL EXECUTE) that is actively fetching data from relational tables. The view will state the current operation based on one of the following values:

- EXECUTING. The command has begun executing.

- FETCHING. Data is being fetched into the analytic workspace.

- FINISHED. The command has finished executing. This status appears very briefly before the record disappears from the table.

For more information refer to the Oracle OLAP Option Users Guide, Section 7 Administering Oracle OLAP – Dynamic Performance Views.

Step 5 - Init.Ora Parameters

Checking the RDBMS parameters are appropriately tuned for your OLAP environment is relatively easy. Fortunately in 10g the majority of init.ora parameters are managed dynamically, however a few parameters that may need to be changed are:

SORTBUFFERSIZE

This should be increased since OLAP AWs use this parameter instead of SORT_AREA_SIZE. So, for every AW, to increase it do the following:

exec DBMS_AW.EXECUTE('aw attach SCOTT.MYAW rwx');

exec DBMS_AW.EXECUTE('shw sortbuffersize');

262,411

exec DBMS_AW.EXECUTE('SortBufferSize=10485760');

exec DBMS_AW.EXECUTE('shw sortbuffersize');

10,485,760

exec DBMS_AW.EXECUTE('aw detach SCOTT.MYAW');

OLAP_PAGE_POOL_SIZE

This should be set to 0 or unset so that auto dynamic page pool is on and is managed by the database (will be set to 50% of PGA size). However, if you have over 8Gb of memory available then you should set the parameter manually and a good value for data loading is to set to 256MB and for multiple users querying concurrently, 64MB.

5 comments:

Hi Keith,

I would first like to thank you for this wonderful article. This is a brilliant attempt to summarize the OLAP Tuning considerations and ensuing efforts. I have been working on many aspects of OLAP cubes and have implemented the fine-tuning recommendations quite often, however your article propelled me to go even further with my efforts. I would like to seek your opinion on the following points (as mentioned in your article) going by my observations through my OLAP journey. Please note that for all the points that follow, my discussion is limited to Oracle Database 10g Enterprise Edition Release 10.2.0.3.0 and AWM v10.2.0.3A.

1. Global Composites:

When it comes to cube build time or the query performance, I have not been able to replicate a situation wherein the inclusion or exclusion of Global Composites has been of any noticeable effect. I have experimented with cubes involving 8-12 dimensions and more than a dozen measures but never

saw any difference in cube build/load or query performance by using or not using Global Composites. This makes me ponder if the usage/non-usage of "Global Composites" should even be a candidate for the fine-tuning efforts.

2. Order of dimensions in implementation tab and rules tab:

I experimented with a 9-dimension cube by creating it in two different ways:

->a. The order of dimensions in the implementation tab and rules tab was from the most populous to the one with least number of members.

->b. The order of dimensions in the implementation tab and rules tab was from the one with least number of members to the one with maximum number of members.

In either case (a and b as above), there was no appreciable difference in cube load time or query performance. Using both the above methods, I was able to load about 1.5 million records per minute into my cube. Also, for both the methods, compression option was used.

3. Partitioning the cube on "Month" level of "TIME" dimension:

I really would like to go with this recommendation, but owing to the data volume of my OLAP system (about 1.5 million records per day), I guess it becomes too much for the OLAP engine to load and aggregate for all levels that I need it to do - I have been running into ORA-600 issues with this particular experiment which is being attributed to a bug. This being a different story all together - I would rather wait for the bug to be fixed rather than

framing any opinion on this. For now, I work with partitioning at the "week" level.

4. Temp Tablespace is not set to Autoextend / Unlimited: (Temp Storage Settings)

This recommendation actually surprised me a bit - this is because going by my interaction with Oracle Support for the SR related to point 3 above, I

come to understand that Oracle OLAP demands Temp Tablespace to be set to Autoextend and Unlimited. However, I tend to agree with your recommendation because I feel so far as we are in a position to monitor our OLAP system for its memory usage and allocate (or rather pre-allocate)

enough memory going by its usage trends, it may not be very necessary to have the Temp Tablespace set to Autoextend and Unlimited. This is actually how we manage the Temp storage settings in our environment.

Well, these are just some of those points that often come to my mind while working with Oracle OLAP and sometimes there seems to be a major conflict between the way things are recommended to work and the way they actually work. I do understand that there might be a lot of things that depend on the particular data model and application scenario that I have at hand, however, I am seeking your valuable guidance so as to reinforce my efforts of "tweaking" and "twisting" the Oracle OLAP option.

Once again, thanks a lot for this valuable article.

Best Regards,

Piyush

Hi Piyush,

Thanks for the feedback. These are interesting observations and I will update my "internal technical paper" with your feedback. Here are my thoughts..

1) Global composites

The main use for global composites is to help tuning the SQL access layer where users want to supress NA rows. The composite provides a single densified object that can be used to controll looking within the OLAP_TABLE function.

There is no data held against the composite, it should be viewed simply as an index. This index is updated during the data loading phase of a cube refresh but has no impact on the aggregation phase.

My view is "never do more work than you have to", therefore, if you do need a global composite, do not implement it. It might have a minimal impact on load times but I would expect it to have some impact especially as the size of the composite grows. For very small incremental updates it might have minimal impact (say loading less than 50 million rows) but for large datasets (for example during a data take-up phase) it could have an impact. You also have to bear in mind there is a fixed limit to the size of the composite object - one of the reasons for using partitioning to create smaller composites - and while this is much larger compared to the old Express days there is still a limit. I am not sure what that limit is, although it is extremely high figure.

2. Order of dimensions

This is more a theory I have about aggregation performance. It is not well tested yet. However, I understand from development that when a cube has a consistent aggregation method (such as SUM) and compression is used, the engine automatically determines the most optimal aggregation process. Where you have mixed aggregation methods (SUM and MAX for example) it should be possible to achieve improved aggregation performance by changing the order of the dimensions as listed on the Rules tab - making sure you still get the correct result!

3. Partitioning

I have also seen ORA-600 issues when loading data. For this reason I tried to make clear the difference between data take-up and incremental loads. The volume of data generated during an data take-up can be enormous and with the commit phase only occuring at the end this can cause space problems within the TEMP tablespace.

For incremental loads I always try to break things down into very small chunks and then slowly increase the size of those incremental chunks to determine an optimal load size.

The interesting part here is the impact of partitioning and the size of the default partition vs the size of the other partitions. I am wondering in your case if the default partition is getting too large. I did have a similar problem with another customer and resolved it by flattening the partition dimension (may be something for another post). I can give you more details if you need them, email me: keith.laker@oracle.com

4. Tablespaces

I never like to use auto-extend because I think you lose control over your environment. It is always wise to monitor resource usage rather than letting the server just grab whatever it needs. But that is a personal preference.

Keith

Hi Keith,

Increasing the sortbuffersize fixed a "string index out of range" error I was receiving when trying to sort a large dimension.

thanks for the informative article.

Mike

Hi Keith,

I've been working on Oracle Express OLAP 6.3.4 for a long time and recently we started develop in 10g

Here began our fight with performance issues, - particularly accessing OLAP cubes using Oracle Discoverer

It takes long time (more then 2 minutes) open OLAP workpages in Discoverer.

We contacted Oracle on this issue but no feasible answer so far. They directed us to next Oracle 11g version

where, as they said, - lots of things done for performance improvement.

Our environment is:

Oracle Database 10g Enterprise Edition Release 10.2.0.3.0,

OracleBI Discoverer 10g (10.1.2.3),

Analytic Workspace Manager 10.2.0.3

There are many articles with Discoverer performance found on web but no solid solution so far.

At that point I would like ask you if you have had experience with the Discoverer and if not -

could suggest some sources with the solutions on front-end reporting?

Thanks,

Oleg

About Discoverer and OLAP - I seem to remember that in 10g opening the first OLAP report in Discoverer (Plus and Viewer) could take some time because the initial load of the first worksheet has to attach the AW and validate the AW metadata.

I would recommend reviewing the following:

1) checking the number of objects in your AW - the more objects you have in the AW the longer this will take to validate when the AW is attached for the first time.

2) Make sure the selection scripts you have created for your reports are not overly complicated. I think in 10g you could improve the performance of many reports by deleting all the selection scripts, saving the report and then defining new selection scripts that return the correct list of dimension members. I think if you "prototype" your reports building up the scripts over a number of attempts then this could create very large selection scripts where many of the steps were not actually needed. Creating new reports and defining the steps in one go could help.

In general 11g will be much faster and the issue with reading the all AW metadata during the first attach has been much improved.

Hope this helps.

Post a Comment