- Oracle BIEE 10g and 11g (see http://oracleolap.blogspot.com/2010/07/first-look-at-obiee-11g-with-oracle.html ) and

- Oracle BI Discoverer Plus OLAP (see http://oracleolap.blogspot.com/2010/08/discoverer-olap-is-certified-with-olap.html ),

Thursday, December 2, 2010

Simba previews Cognos8 Analysis Studio accessing Oracle Database OLAP Option cubes

Tuesday, November 16, 2010

Simba previews Oracle OLAP MDX Provider connectivity to SAP BusinessObjects Voyager

This will be a great capability for users of both Oracle OLAP and BusinessObjects and will futher extend the reach of Oracle database embedded OLAP cubes.

You can get more details on the Simba website

Thursday, November 4, 2010

Microsoft Certifies Simba’s MDX Provider for Oracle OLAP as “Compatible with Windows 7”

Friday, October 22, 2010

Cell level write-back via PL/SQL

Since the first OLAP Option release in 9i it has always been possible to write-back to cubes via the Java OLAP API and OLAP DML. But in recent releases, a new PL/SQL package based API has been developed. My thanks go to the ever-excellent David Greenfield of the Oracle OLAP product development group for bringing this to my attention.

At the most simple level, it is possible to write to a qualified cell:

dbms_cube.build(

'PRICE_COST_CUBE USING (

SET PRICE_COST_CUBE.PRICE["TIME" = ''24'', PRODUCT = ''26''] = 711.61, SOLVE)')

In the example above, a cube solve is executed after the cell write. The objects are referenced by their logical (ie. AWM) names.

This approach is very flexible. For example you can qualify only some dimensions, in this case the assignment is for all products:

dbms_cube.build(

'PRICE_COST_CUBE USING (

SET PRICE_COST_CUBE.PRICE["TIME" = ''24''] = 711.61, SOLVE)')

You can also skip the aggregation:

dbms_cube.build(

'PRICE_COST_CUBE USING (

SET PRICE_COST_CUBE.PRICE["TIME" = ''24'', PRODUCT = ''26''] = 711.61)')

or run multiple cell updates in one call:

dbms_cube.build(

'PRICE_COST_CUBE USING (

SET PRICE_COST_CUBE.PRICE["TIME" = ''24'', PRODUCT = ''26''] = 711.61,

SET PRICE_COST_CUBE.PRICE["TIME" = ''27'', PRODUCT = ''27''] = 86.82,

SOLVE)');

You can also copy from one measure to another.

dbms_cube.build('UNITS_CUBE USING (SET LOCAL_CUBE.UNITS = UNITS_CUBE.UNITS'));

This will copy everything from the UNITS measure in UNITS_CUBE to the UNITS measure in the LOCAL_CUBE. You can put fairly arbitrary expressions on the right hand side and the code will attempt to loop the appropriate composite. You can also control status.

For more details, take a look at the PL/SQL reference documentation

Thursday, October 7, 2010

Incremental Refresh of Oracle Cubes

- The cube will load all data from the source table.

- You can limit the data loaded into the cube by a) presenting new/changed data only via a staging table or filtered view or b) making the cube refreshable using the materialized view refresh system and using a materialized view log table.

- The cube will understand if data has been loaded into a partition. If data has not been loaded into a partition, it will not be processed (aggregated).

- If a partition has had data loaded into it, it will processed (aggregated).

- The cube will understand if a loaded value is new, changed or existing and unchanged. Only new or changed values are processed (the cube attempts to aggregate only new and changed cells and their ancestors).

- Changing parentage of a member in a non-partitioned dimension will trigger a full solve of the cube (across all partitions).

- If a member is added to a non-partitioned dimension, the cube will attempt an incremental aggregation of that dimension (that is, the new member and ancestors only).

Here are two scenarios that illustrate how this works.

1) How to trigger a lot of processing in the cube during a refresh:

- Load from the full fact table rather than a staging table, filtered view or MV log table. The full table will be loaded into the cube.

- Load data into many partitions. Each partition will need to be processed. For example, load data for the last 36 months when the cube is partitioned by time.

- Load data into large partitions. For example, partition by year or quarter rather than quarter or month. Smaller partitions will process more quickly.

- Make frequent hierarchy (parentage) changes in dimensions. For example, realign customers with new regions or reorganize the product dimension during the daily cube refresh. This will trigger a full cube refresh.

2) How to efficiently manage a daily cube refresh:

- Load from staging tables, filtered views or MV log tables where the tables/views contain only new or updated fact data. This will reduce the load step of the build. This becomes more important the larger the fact table is.

- Use as fine grained partitioning strategy as possible. This will result in smaller partitions, which process more efficiently (full or incremental refresh) and offer more opportunity for parallel processing. Also, it is likely that fewer partitions will be processed.

There can be a trade off with query performance. Typically, query performance is better when partitions are at a higher level (e.g., quarter rather than week) because there may be fewer partitions to access and less dynamic aggregation might be required. That said, the gain in refresh performance is typically much greater than the loss in query performance. Building a cube twice as fast is often more important than a small slowdown in query performance.

- Only add new data into the partitioned dimension. For example if the cube is partitioned by time, add data only for new time periods. Only the partitions with those time periods will be refreshed.

Clearly, there are many cases where data must be added to the non-partitioned dimensions. For example, new customers might be added daily. This is ok because a) new members are processed incrementally and b) new customers will likely affect only more recent partitions.

Schedule hierarchy realignments (e.g., changing parentage in product, geography and account type dimensions) weekly instead of daily. This will limit the number of times a full refresh is required. It might also allow you to scheduled the full refresh for a time where the availability of system resources is high and/or the query load is low.

The above scenarios help you understand how to most efficiently refresh a single cube. Also consider business requirements and how model the overall solution Two scenarios follow.

1) Data is available at the day, item and store levels in the fact table. The business requirements are such that all data must be available for query, but in practice most queries (e.g., 95% or more) are at the week, item and store levels.

Consider a solution where data is loaded in the cube at the week, item and city levels and more detailed data (day, item and store levels) are made available by drilling through to the table. This is very easy to do in a product such as Oracle Business Intelligence (OBIEE) or any other tool that has federated query capabilities and will be transparent to the business user.

In this case, the cube is simply smaller and will process more quickly. The compromise is that calculations defined in the cube are only available at the week, item and city levels. This is often a reasonable trade off for faster processing (and perhaps more frequent updates).

2) Data is available at the day, item and store levels in the fact table. The business requirements are such that all data must be available for query, but in practice:

- Longer term trending (e.g., year over year) is done at the week level or higher.

- Analysis of daily data (e.g., same day, week or month ago) is only done for the most recent 90 days.

In this scenario, consider a two cube solution:

- Cube A contains data at the week, item and store levels for all history (e.g., the past 36 months). This might be partitioned at the week level and aggregated to the month, quarter and year levels. Depending on reporting requirements, it might only be refreshed at the end of the week when the full week of data is available.

- Cube B contains data only at the day level for the most recent 90 days. It is not aggregated to the week, month, quarter and year levels (aggregates are serviced by Cube A). This cube is used for the daily sales analysis. This might be partitioned at the day level so that a) any full build of the cube can be highly parallelized and b) the daily update processes only a single and relatively small partition.

Using a tool such as Oracle Business Intelligence, which has federated query capabilities, a single business model can be created that accesses data from both cubes and the table transparently to the business user. When ever the user is querying at the week level or above, data OBIEE queries Cube A. If data is queried at the day level within the most recent 90 days, OBIEE queries Cube B. If data at the day level that is older than 90 days is access, OBIEE queries the table. Again, this can all be transparent to the user in a tool such as Oracle Business Intelligence.

Saturday, September 18, 2010

Simba Technologies and Vlamis Software Solutions hosting special reception at OpenWorld 2010

Friday, September 17, 2010

Special OpenWorld 2010 Cocktail Reception

Simba product managers will also be available at the Oracle OLAP pod in Moscone West to answer questions you might have about Excel Pivot Tables and the MDX Provider for Oracle OLAP.

Tuesday, August 10, 2010

Discoverer OLAP is certified with OLAP 11g

If you are interested, you can download it from OTN under Portal, Forms, Reports and Discoverer (11.1.1.3.0)

An updated version of the BI Spreadsheet add-in has been released too and can also be downloaded from OTN

Tuesday, July 20, 2010

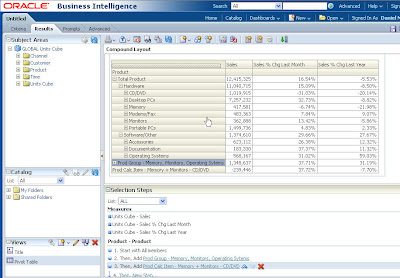

A first look at OBIEE 11g with Oracle OLAP

And the fantastic news for Oracle OLAP customers is that OBIEE 11g will work out-of-the-box with Oracle OLAP in almost exactly the same way as OBIEE 10g does - with just one additional configuration step required to enable the new OLAP-style front-end functionality.

Of course, there are other features that are relevant such as the WebLogic application server, and the new security model, but these have already been well blogged elsewhere so the focus of this posting will be Oracle OLAP integration.

To illustrate how easy it is, I will use a trusted old friend as a starting point - the 11g Global sample schema. I have installed this in an Oracle 11.2 database instance, created an Oracle OLAP Analytic Workspace, and then refreshed this AW so that the dimensions and cubes are built.

With an AW in place, the next step is to use the OBIEE plug-in for AWM to generate the metadata required for the OBIEE Server. For those who have not used the plug-in before, check out this excellent demonstration of how it works. While this particular version of the plug-in was originally released to work with OBIEE 10g, and presumably an updated version will be released in due course, it can be used in exactly the same way in OBIEE 11g to import metadata into the Administration tool.

And at first glance, aside from a few updated icons, this version of the Administration tool looks very similar, but the biggest change related to the administration of OLAP data sources (relational or MOLAP) is the ability to map hierarchy objects right through into the presentation layer.

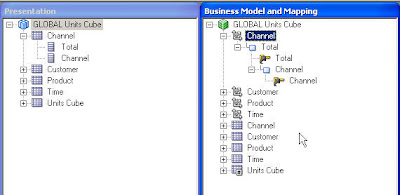

Here is the Metadata generated by the plug-in for the Channel Dimension in both the Business Model and Presentation layers

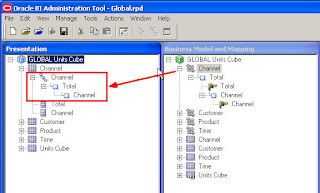

The new 11g OLAP-style front-end functionality is enabled by adding these hierarchies into the Presentation layer too. This can be achieved by a simple click-and-drag for each hierarchy like the following which is again for the Channel Dimension

Hopefully, the next release of the plug-in will handle this additional step automatically (and also provide support for value-based hierarchies which were not supported by the front-end in OBIEE 10g) but in the meantime it really is just a simple click-and-drag for each dimension.

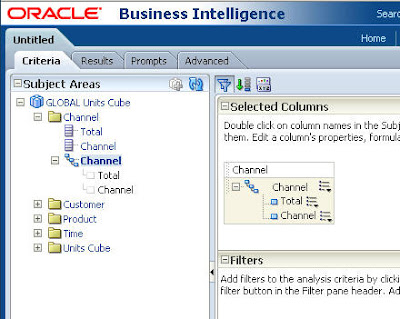

Once all the hierarchies are mapped through into the Presentation Layer, the cube is ready to query. I can log into the OBIEE 11g home page and create a new analysis based upon my Oracle OLAP subject area. The new hierarchies are available for selection when I construct a query

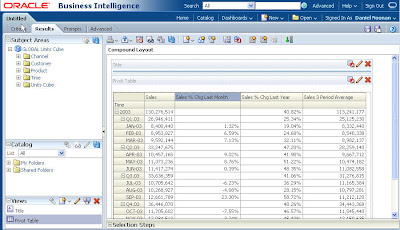

I can then select all of the 'columns' I need for my query and view the results as a pivot table. Here is a really simple example showing Sales by Time. I have also added some calculated measures which have been created inside the AW and derive really useful analytics from the Sales measure. This is a classic reason for using the OLAP Option in the first place - it facilitates the easy creation of calculations that are difficult (or often impossible) to express in SQL. And by having them embedded in the cube, the only thing that the SQL tool (in this case OBIEE) needs to do is select the calculation as a field in a view. How easy is that?!

Once a pivot table with Hierarchy-based columns has been created, this is where the new front-end features really come into play. Some highlights include Calculated Items (derived Dimension members) and a new Selector (which allows dimension selections to be built up as a series of steps based upon add/keep/remove logic):

For those familiar with Discoverer OLAP, or Sales Analyzer, Financial Analyzer and Express Objects/Analyzer, these aren't exactly revolutionary features, but combined with all the other great features of the OBIEE suite, this is now a very compelling platform for your Oracle OLAP data.

Finally, I would guess that there are probably thousands of old Oracle Express/OLAP systems that have been waiting for a BI platform like this. If you work on one, what are you waiting for?

***OBIEE 11g can now be downloaded from OTN***

Friday, June 18, 2010

Bissantz DeltaMaster - Cool Tool for OLAP

I recently returned from a trip to Germany where visted a Bissantz, a relatively small company in Nürnburg that develops and markets an interesting reporting and data visualization tool named DeltaMaster that works with Oracle OLAP (and other data sources). I was very impressed with this tool. There are few things that I really liked about it:

- It's very good at displaying a lot of information within a single report. One of the ways that it does this is by mixing graphical representations of data with numerical representation (they are very big on something called 'Sparklines'). This makes it very easy to create a single report that includes data on, for example, sales for the current quarter but also provides indications of sales trends and shares.

- The presentation of data is very clean. While the reports themselves are very sophisticated, the developers have done a terrific job of presenting them to users. The presentation tends to be more functional than fluffy, but it's done very well. It is easy on the eyes.

- DeltaMaster goes way beyond basic cross tabs and charts. There are prebuilt reports / analysis templates for rankings, concentration analysis, portfolio analysis, etc. There's quite a few different types of pre-built analysis and I won't try to do justice to them here. See for yourself.

- It works better on OLAP than tables. I'm obviously biased when it comes to this topic, but for the end user this means more analytic power and flexibility.

Below is a concentration analysis report. This is along the lines of a Pareto chart. There are many different types of built-in analysis, but this one looks nice in the confined space of this blog's page.

The DeltaMaster page at Bissantz: http://www.bissantz.com/products/

A clever blog by Bella, the Bissantz company dog: http://www.bella-consults.com/

Bella, if you happen to find your way to this blog, here's a 'hello' from Henry (the OLAP product manager's dog).

Thursday, May 6, 2010

Time Dimensions with Hourly Time Periods

First, a little implementation detail. The data was supplied in the fact and dimension tables at the Hour level with a TIMESTAMP data type. As you might expect then, there were time periods at the hour level such as:

02-JAN-10 10.00.00.000000000 AM

02-JAN-10 11.00.00.000000000 AM

02-JAN-10 12.00.00.000000000 PM

02-JAN-10 01.00.00.000000000 PM

02-JAN-10 02.00.00.000000000 PM

In my first attempt at building the time dimension I loaded hours directly from TIMESTAMP data type. In that case, the members at Hour level were loaded into the dimension stripped of the hour (e.g., 02-JAN-10). Since this isn't what I wanted, I converted the hours into a CHAR as follows:

CREATE VIEW time_dim_view AS

SELECT

TO_CHAR(hour_id, 'DD-MON-YYYY HH24') AS hour_id,

TO_CHAR(hour_id, 'DD-MON-YYYY HH24') AS hour_desc,

hour_time_span,

hour_id AS hour_end_date,

.. and so on.

This gave me dimension members at hour as follows:

01-JAN-2010 00

01-JAN-2010 01

01-JAN-2010 02

01-JAN-2010 03

01-JAN-2010 04

That worked just fine. I did the same for the descriptions (so that they would be more easily readable by end users) and added a corresponding column to a fact view so that the time view and fact view joined correctly on the TO_CHAR(...) columns.

For the TIME SPAN attribute, I used a fractional value of DAY (0.041667, which is 1/24th of a day). I read the DATETIME into the END DATE attribute as is (no conversion required). From there on, everything worked perfectly (cube builds, time series calculations, etc).

If you happen to look at the END DATE attribute from the OLAP DML side, be sure to wrap the END_DATE object in a TO_CHAR function so that you see the hours. Otherwise, you will see only the day in most cases (it depends on the NLS_DATE_FORMAT setting for the session). For example:

REPORT DOWN TIME TO_CHAR(TIME_END_DATE 'DD_MON_YYYY HH24')

The other thing that was interesting has more to do with the application design. As so often happens, the customer was inclined to build one cube with all history at the hour level (two years of history). When examining the reporting requirements, however, it turned out that hour level analysis very rarely occurs more than 2 month back. Almost all of the reporting looking back over the two years was at the day level or higher (that is, not hourly level reporting).

We could have built the one cube (two years, hour and higher), but most of the processing of hour level data would have been a waste because users don't look at the older data at that level. Instead, we built a very efficient application with two cubes. One cube contained only three months of data at the hour, day, month, quarter and year levels. Another cube contained two years of history starting at the day level.

Presentation of the data is mostly done using Oracle Business Intelligence Enterprise Edition(via SQL to the cube). Some reports examine hourly level data. Other reports examine more aggregate data over longer time periods. Time series calculations (e.g., period to date, moving average, etc.) were added to both cubes and made available in the OBIEE reports.

Occasionally, a user will want to drill from day to hour more than three months back. To support this, OBIEE was set up to drill from day (in the two year cube) to hour in the fact table. The only compromise was that the time series calculations of the cube were not available when drilling to hour in the fact table. That didn't matter to these users.

From the end user perspective, the fact that there were two cubes instead of one (as well as a fact table) was completely irrelevant since OBIEE presented all data in reports in a single dashboard. From a processing perspective, the system was much more efficient and manageable as compared to the single big cube approach.

It is very worthwhile to keep this lesson in mind when you design your applications. Pay careful attention to reporting requirements and build cubes that meet those requirements. You can tie multiple cubes together in a tool such as OBIEE. This approach is often much better then building a single cube every level of detail.

In this case, the example is about what level of detail is in which cube. The same concept applies to dimensions. You might find it much more efficient to build Cube 1 with dimensions A, B, C and D and Cube 2 with dimensions A, B, E and F rather than one big cube with all dimensions.

Tuesday, March 16, 2010

Parallel Execution of OLAP DML

Here's a code sample that illustrates how to process partitions of a cube in parallel. For this example, assume that the cube has four dimensions: time, product, geography and channel. The cube is partitioned on the time dimension at the retail year level. An OLAP DML program is used to assign data into the cube at the day level (it will be aggregated later).

" Attach the AW

AW ATTACH global_business multi

" Set status of time to match the partition. In this example, the partition is 'P3'

LIMIT time TO PARTITION(sales_prt_template) EQ 'P3'

" Keep data at the day level.

LIMIT time KEEP time_levelrel 'DAY'

" Set status of other dimensions.

LIMIT product TO product_levelrel 'ITEM'

LIMIT geography TO geography_levelrel 'STATE_PROVINCE'

LIMIT store TO store_levelrel 'STORE'

" Acquire the partition for write.

ACQUIRE RESYNC sales_stored_stored (PARTITION p3)

" OLAP DML assignments.

sales_stored(sales_measure_dim 'DOLLAR_SALES') = sales_stored(sales_measure_dim 'QUANTITY') * price_stored(price_measure_dim 'PRICE') across sales_stored_prt_template

" Update and commit to save work.

UPDATE multi

COMMIT

" Release the partition.

RELEASE sales_stored (partition p3)

" Detach the AW

AW DETACH global_business

The important points are:

- The attach mode is MULTI

- Use ACQUIRE to attach the appropriate partition.

- The PARTITION function can be used to set status of the partitioned dimension to the dimension members in the partition.

- Use the MULTI keyword with UPDATE.

- RELEASE the partition.

- While it doesn't have anything to do with running this in parallel, "across sales_stored_prt_template" will cause the OLAP DML to loop the local composite index of the partition rather than loop over the base dimensions.

Repeat this code (or use parameters) for each partition. To run in parallel, run these commands in seperate database sessions for each partition. The above code sample is pure OLAP DML. In practice, it makes sense to execute this in the context of PL/SQL that you can call in separate SQL Plus sessions. E.g.,

BEGIN

-- Attach the AW in multi-write model.

dbms_aw.execute('AW ATTACH global_business multi');

-- Set status of time to match the partition.

dbms_aw.execute('LIMIT time TO PARTITION sales_prt_template) EQ ''P1''');

dbms_aw.execute('LIMIT time KEEP time_levelrel ''DAY''');

-- Set status of other dimensions.

dbms_aw.execute('LIMIT product TO product_levelrel ''ITEM''');

dbms_aw.execute('LIMIT geography TO geography_levelrel ''STATE_PROVINCE''');

dbms_aw.execute('LIMIT store TO store_levelrel ''STORE''');

-- Aquire the partition for write.

dbms_aw.execute('ACQUIRE RESYNC sales_stored_stored (PARTITION p1)');

-- OLAP DML assigment.

dbms_aw.execute('sales_stored(sales_measure_dim ''DOLLAR_SALES'') = sales_stored(sales_measure_dim ''QUANTITY'') * price_stored(price_measure_dim ''PRICE'') across sales_prt_template');

-- Update to save work.

dbms_aw.execute('UPDATE multi');

-- Release the partition.

dbms_aw.execute('RELEASE sales_stored_stored (partition p1)');

-- Detach the AW

dbms_aw.execute('AW DETACH global_business');

END;

/

COMMIT;

If this is done with PL/SQL, then you can run a shell script such as this to run all partitions in parallel.

sqlplus olaptrain/***** @set_sales_cost_p1.sql &

sqlplus olaptrain/***** @set_sales_cost_p2.sql &

sqlplus olaptrain/***** @set_sales_cost_p3.sql &

sqlplus olaptrain/***** @set_sales_cost_p4.sql &

Tuesday, February 23, 2010

Excel and Oracle OLAP - Reporting No-Agg Measures

Here's a situation that's been reported as a bug, but you really just need to know the right Excel Pivot Table option to choose. Consider a cube that has measures that do not aggregate but is dimensioned by a dimension with a hierarchy. In this case, there is a cube with a Store dimension with levels Store > Store Type > All Stores. The stores are located in different countries and sell in local currencies. There is a Local Currency measure, with sales reported in whatever the local currencies might be (Euros, Dollars, Yen, etc.) and a Dollar Sales measure with the U.S. Dollar conversation. As a common currency, Dollars can be aggregated. Local currencies can't be aggregated.

Here's a sample report in Excel.

Note that Dollar Sales is reported for Direct and Indirect but Local Sales is not. That's correct because Local Sales doesn't aggregate.

But what if I happen to select only Local Sales (which is null at the aggregate members Direct and Indirect). By default, Excel will display the report as shown below.

This isn't very useful because I can't drill down on the Direct member to get at the stores. The solution is simple, but a lot of people seem to miss it. Just choose the Show items with no data in rows PivotTable option.

Now you will be able to see the Direct and Indirect members, allowing you to drill to stores.

Now, after the drill.

Thursday, February 11, 2010

Oracle Exadata: A Single Source of Truth (New Video)

Over the last 6-9 months we have been releasing a series of videos on YouTube under the banner of "DBA2.0" (a quick search on YouTube will list all these videos). These videos follow the work of a dedicated team of DBAs and business users as they try to get their jobs done in an environment that just keeps throwing up new challenges. Fortunately, Oracle is there to save the day...

This latest scenario deals with data warehousing and specifically Exadata. It is in two parts and the first installment has just been released - "Oracle Exadata: A Single Source of Truth". Here is the story so far:

A stroll through the halls of your IT department may reveal that there is no love lost between the DBAs and business analysts they support. In today's hyper competitive environment, business analysts need to perform more and more predictive analytics and they want the answers yesterday, but managing separate BI and OLAP servers and ensuring fast query performance can be a challenge for DBAs.

Watch this short video to see how the dynamic DBA duo address this challenge using Oracle Exadata, forging a truce with their new business analyst and even getting her to crack a smile - sort of.

Enjoy this video at http://www.youtube.com/watch?v=WgVSu-4Mizs and stay tuned for Part 2 which will be available shortly...

(Please Note: No DBAs or Business Analysts were harmed in the making of these videos)

Wednesday, February 10, 2010

Using MindMapping to view OLAP hierarchies...

http://ofaworld.wordpress.com/2010/02/09/ofa-mindmapping/Now that is cool!

http://ofaworld.wordpress.com/2009/06/10/sample-olap-dml-code-gplcode/

http://ofaworld.wordpress.com/2009/12/01/oracle-olap-mindmapping/